
WebRTC Audio/Video Calls: A Custom RTCVideoCapturer Camera for WebRTC Video


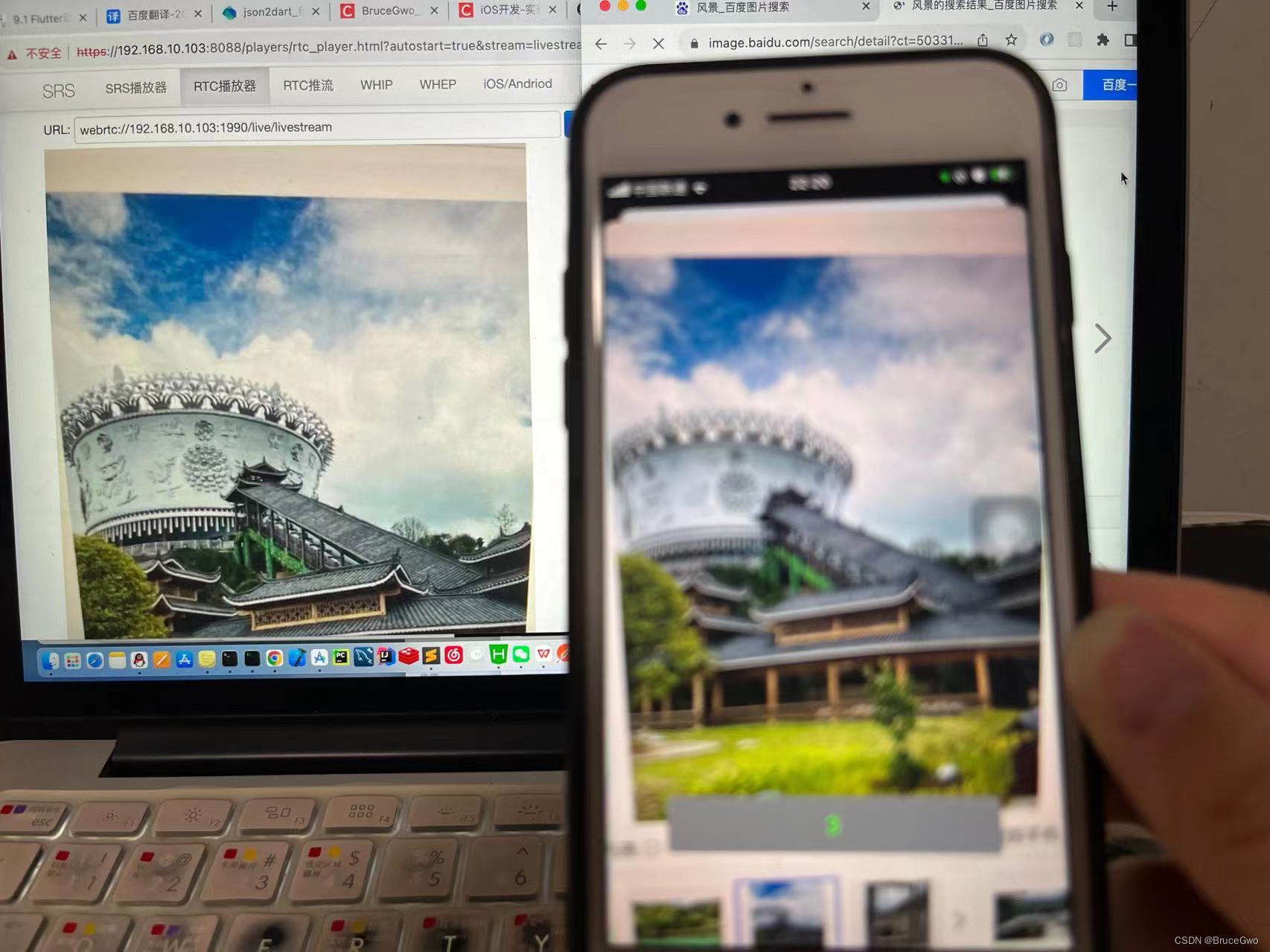

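In an earlier post I used WebRTC with an ossrs server to implement live-streaming video calls. In practice, however, the RTCCameraVideoCapturer class offers no way to change or fine-tune camera settings such as lighting (exposure, ISO, white balance). To get that control, we subclass RTCVideoCapturer and capture the frames ourselves.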

For how the iOS client calls ossrs through WebRTC to implement live video calls, see:

https://blog.csdn.net/gloryFlow/article/details/132262724

This post covers the custom RTCVideoCapturer.

1. Classes needed for a custom camera

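The classes you need to know:

- AVCaptureSession: the iOS object that manages and coordinates the flow of data from capture inputs to capture outputs.
- AVCaptureDevice: represents a physical capture device, such as a camera.
- AVCaptureDeviceInput: feeds the data captured by an AVCaptureDevice into the session.
- AVCaptureMetadataOutput: a capture output that handles the timed metadata (for example, detected faces) produced by an AVCaptureSession.
- AVCaptureVideoDataOutput: a capture output that delivers the captured video frames for processing.
- AVCaptureVideoPreviewLayer: the preview layer that displays the video the camera is capturing.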

2. Camera capture with a custom RTCVideoCapturer

For the custom camera, we add an AVCaptureVideoDataOutput to the AVCaptureSession:

```objectivec
self.dataOutput = [[AVCaptureVideoDataOutput alloc] init];
// Drop frames that arrive while the delegate is still busy with the previous one.
[self.dataOutput setAlwaysDiscardsLateVideoFrames:YES];
// 420f (bi-planar 4:2:0 YCbCr, full range) when YUV is requested, otherwise 32BGRA.
self.dataOutput.videoSettings = @{(id)kCVPixelBufferPixelFormatTypeKey : @(needYuvOutput ? kCVPixelFormatType_420YpCbCr8BiPlanarFullRange : kCVPixelFormatType_32BGRA)};
// Frames are delivered to the sample buffer delegate on bufferQueue.
[self.dataOutput setSampleBufferDelegate:self queue:self.bufferQueue];
if ([self.session canAddOutput:self.dataOutput]) {
    [self.session addOutput:self.dataOutput];
} else {
    NSLog(@"Could not add video data output to the session");
    return nil;
}
```

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14
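
The two Core Video constants chosen between above are FourCharCode values, which show up as opaque integers in logs. Below is a small plain-C sketch (C compiles unchanged inside a .m file) that mirrors the `needYuvOutput` choice and decodes the code back into its four characters. The macro and function names are illustrative, not part of Core Video or WebRTC.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Pack four characters into a FourCharCode, first character in the most
   significant byte (the layout behind Core Video's '420f' and 'BGRA'). */
#define FOURCC(a, b, c, d)                      \
    (((uint32_t)(unsigned char)(a) << 24) |     \
     ((uint32_t)(unsigned char)(b) << 16) |     \
     ((uint32_t)(unsigned char)(c) << 8) |      \
     (uint32_t)(unsigned char)(d))

/* Numeric values of the two constants used in the videoSettings snippet. */
#define FORMAT_420F FOURCC('4', '2', '0', 'f') /* kCVPixelFormatType_420YpCbCr8BiPlanarFullRange */
#define FORMAT_BGRA FOURCC('B', 'G', 'R', 'A') /* kCVPixelFormatType_32BGRA */

/* Mirrors `needYuvOutput ? ... : ...` in the videoSettings dictionary. */
static uint32_t output_pixel_format(bool need_yuv_output) {
    return need_yuv_output ? FORMAT_420F : FORMAT_BGRA;
}

/* Write the four characters of `code` plus a terminating NUL into `out`. */
static void fourcc_to_string(uint32_t code, char *out, size_t out_len) {
    assert(out_len >= 5);
    out[0] = (char)((code >> 24) & 0xFF);
    out[1] = (char)((code >> 16) & 0xFF);
    out[2] = (char)((code >> 8) & 0xFF);
    out[3] = (char)(code & 0xFF);
    out[4] = '\0';
}
```

For example, `fourcc_to_string(output_pixel_format(true), buf, sizeof buf)` leaves `"420f"` in `buf`; the same decoding can be applied to the value returned by `preferredOutputPixelFormat` when logging.
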

Next, implement the AVCaptureVideoDataOutputSampleBufferDelegate method:

```objectivec
- (void)captureOutput:(AVCaptureOutput *)captureOutput
didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer
       fromConnection:(AVCaptureConnection *)connection;
```

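In this callback, get the CVPixelBufferRef from the CMSampleBufferRef, wrap the (optionally processed) CVPixelBufferRef in an RTCVideoFrame, and hand the frame to WebRTC by calling the `capturer:didCaptureVideoFrame:` method implemented by WebRTC's localVideoSource (the RTCVideoSource that acts as the capturer's delegate).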
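
Besides the pixel buffer, RTCVideoFrame's `initWithBuffer:rotation:timeStampNs:` initializer needs a rotation and a timestamp in nanoseconds. The plain-C sketch below reproduces the rotation table from the commented-out `captureOutput:` implementation in SDCustomRTCCameraCapturer.m, and the timestamp formula that WebRTC's own RTCCameraVideoCapturer uses (presentation time in seconds multiplied by 10^9). The enum is a hypothetical stand-in for UIDeviceOrientation's raw values, and the function names are illustrative, not WebRTC API.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical stand-in carrying the raw values of UIKit's UIDeviceOrientation. */
enum DeviceOrientation {
    DeviceOrientationUnknown = 0,
    DeviceOrientationPortrait = 1,
    DeviceOrientationPortraitUpsideDown = 2,
    DeviceOrientationLandscapeLeft = 3,
    DeviceOrientationLandscapeRight = 4,
    DeviceOrientationFaceUp = 5,
    DeviceOrientationFaceDown = 6
};

/* Returns the rotation in degrees; RTCVideoRotation_0/_90/_180/_270 have
   exactly these values. FaceUp, FaceDown and Unknown keep the last rotation,
   as the "Ignore." branch in the .m does. */
static int rotation_for_orientation(enum DeviceOrientation orientation,
                                    bool using_front_camera,
                                    int previous_rotation) {
    switch (orientation) {
        case DeviceOrientationPortrait:
            return 90;
        case DeviceOrientationPortraitUpsideDown:
            return 270;
        case DeviceOrientationLandscapeLeft:
            return using_front_camera ? 180 : 0;
        case DeviceOrientationLandscapeRight:
            return using_front_camera ? 0 : 180;
        default:
            return previous_rotation;
    }
}

/* Presentation time given as a CMTime-style value/timescale pair, converted
   to nanoseconds the same way as CMTimeGetSeconds(pts) * 1e9. */
static int64_t timestamp_ns(int64_t value, int32_t timescale) {
    assert(timescale > 0);
    return (int64_t)((double)value / (double)timescale * 1e9);
}
```

In the Objective-C callback, these two values would feed `[[RTCVideoFrame alloc] initWithBuffer:[[RTCCVPixelBuffer alloc] initWithPixelBuffer:pixelBuffer] rotation:... timeStampNs:...]`, after which the frame is passed to the video source with `capturer:didCaptureVideoFrame:`.
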

The complete code follows.

SDCustomRTCCameraCapturer.h

```objectivec
#import <AVFoundation/AVFoundation.h>
#import <Foundation/Foundation.h>
#import <WebRTC/WebRTC.h>

@protocol SDCustomRTCCameraCapturerDelegate <NSObject>

- (void)rtcCameraVideoCapturer:(RTCVideoCapturer *)capturer didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer;

@end

@interface SDCustomRTCCameraCapturer : RTCVideoCapturer

@property(nonatomic, weak) id<SDCustomRTCCameraCapturerDelegate> delegate;

// Capture session that is used for capturing. Valid from initialization to dealloc.
@property(nonatomic, strong) AVCaptureSession *captureSession;

// Returns list of available capture devices that support video capture.
+ (NSArray<AVCaptureDevice *> *)captureDevices;
// Returns list of formats that are supported by this class for this device.
+ (NSArray<AVCaptureDeviceFormat *> *)supportedFormatsForDevice:(AVCaptureDevice *)device;

// Returns the most efficient supported output pixel format for this capturer.
- (FourCharCode)preferredOutputPixelFormat;

// Starts the capture session asynchronously and notifies callback on completion.
// The device will capture video in the format given in the `format` parameter. If the pixel format
// in `format` is supported by the WebRTC pipeline, the same pixel format will be used for the
// output. Otherwise, the format returned by `preferredOutputPixelFormat` will be used.
- (void)startCaptureWithDevice:(AVCaptureDevice *)device
                        format:(AVCaptureDeviceFormat *)format
                           fps:(NSInteger)fps
             completionHandler:(nullable void (^)(NSError *))completionHandler;
// Stops the capture session asynchronously and notifies callback on completion.
- (void)stopCaptureWithCompletionHandler:(nullable void (^)(void))completionHandler;

// Starts the capture session asynchronously.
- (void)startCaptureWithDevice:(AVCaptureDevice *)device
                        format:(AVCaptureDeviceFormat *)format
                           fps:(NSInteger)fps;
// Stops the capture session asynchronously.
- (void)stopCapture;

#pragma mark - Custom camera

@property (nonatomic, readonly) dispatch_queue_t bufferQueue;

@property (nonatomic, assign) AVCaptureDevicePosition devicePosition; // default AVCaptureDevicePositionFront

@property (nonatomic, assign) AVCaptureVideoOrientation videoOrientation;

@property (nonatomic, assign) BOOL needVideoMirrored;

@property (nonatomic, strong, readonly) AVCaptureConnection *videoConnection;

@property (nonatomic, copy) NSString *sessionPreset; // default 640x480

@property (nonatomic, strong) AVCaptureVideoPreviewLayer *previewLayer;

@property (nonatomic, assign) BOOL bSessionPause;

@property (nonatomic, assign) int iExpectedFPS;

@property (nonatomic, readwrite, strong) NSDictionary *videoCompressingSettings;

- (instancetype)initWithDevicePosition:(AVCaptureDevicePosition)iDevicePosition
                        sessionPresset:(AVCaptureSessionPreset)sessionPreset
                                   fps:(int)iFPS
                         needYuvOutput:(BOOL)needYuvOutput;

- (void)setExposurePoint:(CGPoint)point inPreviewFrame:(CGRect)frame;

// Despite the name, this sets the exposure target bias (EV), not the ISO.
- (void)setISOValue:(float)value;

- (void)startRunning;

- (void)stopRunning;

- (void)rotateCamera;

- (void)rotateCamera:(BOOL)isUseFrontCamera;

- (void)setWhiteBalance;

- (CGRect)getZoomedRectWithRect:(CGRect)rect scaleToFit:(BOOL)bScaleToFit;

@end
```
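
`getZoomedRectWithRect:scaleToFit:` (implemented in SDCustomRTCCameraCapturer.m) scales the video size read from `dataOutput.videoSettings` onto a view rect and keeps it centered: with `scaleToFit` it divides by the larger of the two ratios so the whole frame fits inside the rect, otherwise by the smaller one so the frame fills the rect. Here is the same arithmetic as a standalone C sketch; `Rect` is a hypothetical stand-in for CGRect, and the video size is passed in rather than read from `videoSettings`.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical stand-in for CGRect so the math compiles without CoreGraphics. */
typedef struct {
    double x, y, width, height;
} Rect;

static Rect zoomed_rect(Rect rect, double video_width, double video_height,
                        bool scale_to_fit) {
    assert(rect.width > 0 && rect.height > 0);
    double scale_x = video_width / rect.width;
    double scale_y = video_height / rect.height;
    /* Larger divisor: the video shrinks to fit inside rect (aspect fit).
       Smaller divisor: the video covers rect and overflows it (aspect fill). */
    double scale = scale_to_fit ? (scale_x > scale_y ? scale_x : scale_y)
                                : (scale_x < scale_y ? scale_x : scale_y);
    double w = video_width / scale;
    double h = video_height / scale;
    /* Keep the scaled video centered on the original rect. */
    Rect out = { rect.x - (w - rect.width) / 2.0,
                 rect.y - (h - rect.height) / 2.0, w, h };
    return out;
}
```

With a 720x1280 video and a 360x780 view, the fit case yields a 360x640 rect offset 70 points down, while the fill case yields roughly 438.75x780 shifted about 39.4 points to the left.
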

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

SDCustomRTCCameraCapturer.m

```objectivec
#import "SDCustomRTCCameraCapturer.h"
#import <UIKit/UIKit.h>
#import "EFMachineVersion.h"

//#import "base/RTCLogging.h"
//#import "base/RTCVideoFrameBuffer.h"
//#import "components/video_frame_buffer/RTCCVPixelBuffer.h"
//
//#if TARGET_OS_IPHONE
//#import "helpers/UIDevice+RTCDevice.h"
//#endif
//
//#import "helpers/AVCaptureSession+DevicePosition.h"
//#import "helpers/RTCDispatcher+Private.h"

typedef NS_ENUM(NSUInteger, STExposureModel) {
    STExposureModelPositive5,
    STExposureModelPositive4,
    STExposureModelPositive3,
    STExposureModelPositive2,
    STExposureModelPositive1,
    STExposureModel0,
    STExposureModelNegative1,
    STExposureModelNegative2,
    STExposureModelNegative3,
    STExposureModelNegative4,
    STExposureModelNegative5,
    STExposureModelNegative6,
    STExposureModelNegative7,
    STExposureModelNegative8,
};

static char * kEffectsCamera = "EffectsCamera";

static STExposureModel currentExposureMode;

@interface SDCustomRTCCameraCapturer ()<AVCaptureVideoDataOutputSampleBufferDelegate, AVCaptureAudioDataOutputSampleBufferDelegate, AVCaptureMetadataOutputObjectsDelegate>

@property(nonatomic, readonly) dispatch_queue_t frameQueue;
@property(nonatomic, strong) AVCaptureDevice *currentDevice;
@property(nonatomic, assign) BOOL hasRetriedOnFatalError;
@property(nonatomic, assign) BOOL isRunning;
// Will the session be running once all asynchronous operations have been completed?
@property(nonatomic, assign) BOOL willBeRunning;

@property (nonatomic, strong) AVCaptureDeviceInput *deviceInput;
@property (nonatomic, strong) AVCaptureVideoDataOutput *dataOutput;
@property (nonatomic, strong) AVCaptureMetadataOutput *metaOutput;
@property (nonatomic, strong) AVCaptureStillImageOutput *stillImageOutput;

@property (nonatomic, readwrite) dispatch_queue_t bufferQueue;
@property (nonatomic, strong, readwrite) AVCaptureConnection *videoConnection;
@property (nonatomic, strong) AVCaptureDevice *videoDevice;
@property (nonatomic, strong) AVCaptureSession *session;

@end

@implementation SDCustomRTCCameraCapturer {
    AVCaptureVideoDataOutput *_videoDataOutput;
    AVCaptureSession *_captureSession;
    FourCharCode _preferredOutputPixelFormat;
    FourCharCode _outputPixelFormat;
    RTCVideoRotation _rotation;
    float _autoISOValue;
#if TARGET_OS_IPHONE
    UIDeviceOrientation _orientation;
    BOOL _generatingOrientationNotifications;
#endif
}

@synthesize frameQueue = _frameQueue;
@synthesize captureSession = _captureSession;
@synthesize currentDevice = _currentDevice;
@synthesize hasRetriedOnFatalError = _hasRetriedOnFatalError;
@synthesize isRunning = _isRunning;
@synthesize willBeRunning = _willBeRunning;

- (instancetype)init {
    return [self initWithDelegate:nil captureSession:[[AVCaptureSession alloc] init]];
}

- (instancetype)initWithDelegate:(__weak id<RTCVideoCapturerDelegate>)delegate {
    return [self initWithDelegate:delegate captureSession:[[AVCaptureSession alloc] init]];
}

// This initializer is used for testing.
- (instancetype)initWithDelegate:(__weak id<RTCVideoCapturerDelegate>)delegate
                  captureSession:(AVCaptureSession *)captureSession {
    if (self = [super initWithDelegate:delegate]) {
        // Create the capture session and all relevant inputs and outputs. We need
        // to do this in init because the application may want the capture session
        // before we start the capturer for e.g. AVCapturePreviewLayer. All objects
        // created here are retained until dealloc and never recreated.
        if (![self setupCaptureSession:captureSession]) {
            return nil;
        }
        NSNotificationCenter *center = [NSNotificationCenter defaultCenter];
#if TARGET_OS_IPHONE
        _orientation = UIDeviceOrientationPortrait;
        _rotation = RTCVideoRotation_90;
        [center addObserver:self selector:@selector(deviceOrientationDidChange:) name:UIDeviceOrientationDidChangeNotification object:nil];
        [center addObserver:self selector:@selector(handleCaptureSessionInterruption:) name:AVCaptureSessionWasInterruptedNotification object:_captureSession];
        [center addObserver:self selector:@selector(handleCaptureSessionInterruptionEnded:) name:AVCaptureSessionInterruptionEndedNotification object:_captureSession];
        [center addObserver:self selector:@selector(handleApplicationDidBecomeActive:) name:UIApplicationDidBecomeActiveNotification object:[UIApplication sharedApplication]];
#endif
        [center addObserver:self selector:@selector(handleCaptureSessionRuntimeError:) name:AVCaptureSessionRuntimeErrorNotification object:_captureSession];
        [center addObserver:self selector:@selector(handleCaptureSessionDidStartRunning:) name:AVCaptureSessionDidStartRunningNotification object:_captureSession];
        [center addObserver:self selector:@selector(handleCaptureSessionDidStopRunning:) name:AVCaptureSessionDidStopRunningNotification object:_captureSession];
    }
    return self;
}

//- (void)dealloc {
//    NSAssert(
//             !_willBeRunning,
//             @"Session was still running in RTCCameraVideoCapturer dealloc. Forgot to call stopCapture?");
//    [[NSNotificationCenter defaultCenter] removeObserver:self];
//}

+ (NSArray<AVCaptureDevice *> *)captureDevices {
#if defined(WEBRTC_IOS) && defined(__IPHONE_10_0) && \
    __IPHONE_OS_VERSION_MIN_REQUIRED >= __IPHONE_10_0
    AVCaptureDeviceDiscoverySession *session = [AVCaptureDeviceDiscoverySession
        discoverySessionWithDeviceTypes:@[ AVCaptureDeviceTypeBuiltInWideAngleCamera ]
                              mediaType:AVMediaTypeVideo
                               position:AVCaptureDevicePositionUnspecified];
    return session.devices;
#else
    return [AVCaptureDevice devicesWithMediaType:AVMediaTypeVideo];
#endif
}

+ (NSArray<AVCaptureDeviceFormat *> *)supportedFormatsForDevice:(AVCaptureDevice *)device {
    // Support opening the device in any format. We make sure it's converted to a format we
    // can handle, if needed, in the method `-setupVideoDataOutput`.
    return device.formats;
}

- (FourCharCode)preferredOutputPixelFormat {
    return _preferredOutputPixelFormat;
}

- (void)startCaptureWithDevice:(AVCaptureDevice *)device
                        format:(AVCaptureDeviceFormat *)format
                           fps:(NSInteger)fps {
    [self startCaptureWithDevice:device format:format fps:fps completionHandler:nil];
}

- (void)stopCapture {
    [self stopCaptureWithCompletionHandler:nil];
}

- (void)startCaptureWithDevice:(AVCaptureDevice *)device
                        format:(AVCaptureDeviceFormat *)format
                           fps:(NSInteger)fps
             completionHandler:(nullable void (^)(NSError *))completionHandler {
    _willBeRunning = YES;
    [RTCDispatcher dispatchAsyncOnType:RTCDispatcherTypeCaptureSession
                                 block:^{
        RTCLogInfo("startCaptureWithDevice %@ @ %ld fps", format, (long)fps);

#if TARGET_OS_IPHONE
        dispatch_async(dispatch_get_main_queue(), ^{
            if (!self->_generatingOrientationNotifications) {
                [[UIDevice currentDevice] beginGeneratingDeviceOrientationNotifications];
                self->_generatingOrientationNotifications = YES;
            }
        });
#endif

        self.currentDevice = device;

        NSError *error = nil;
        if (![self.currentDevice lockForConfiguration:&error]) {
            RTCLogError(@"Failed to lock device %@. Error: %@", self.currentDevice, error.userInfo);
            if (completionHandler) {
                completionHandler(error);
            }
            self.willBeRunning = NO;
            return;
        }
        [self reconfigureCaptureSessionInput];
        [self updateOrientation];
        [self updateDeviceCaptureFormat:format fps:fps];
        [self updateVideoDataOutputPixelFormat:format];
        [self.captureSession startRunning];
        [self.currentDevice unlockForConfiguration];
        self.isRunning = YES;
        if (completionHandler) {
            completionHandler(nil);
        }
    }];
}

- (void)stopCaptureWithCompletionHandler:(nullable void (^)(void))completionHandler {
    _willBeRunning = NO;
    [RTCDispatcher dispatchAsyncOnType:RTCDispatcherTypeCaptureSession
                                 block:^{
        RTCLogInfo("Stop");
        self.currentDevice = nil;
        for (AVCaptureDeviceInput *oldInput in [self.captureSession.inputs copy]) {
            [self.captureSession removeInput:oldInput];
        }
        [self.captureSession stopRunning];

#if TARGET_OS_IPHONE
        dispatch_async(dispatch_get_main_queue(), ^{
            if (self->_generatingOrientationNotifications) {
                [[UIDevice currentDevice] endGeneratingDeviceOrientationNotifications];
                self->_generatingOrientationNotifications = NO;
            }
        });
#endif
        self.isRunning = NO;
        if (completionHandler) {
            completionHandler();
        }
    }];
}

#pragma mark iOS notifications

#if TARGET_OS_IPHONE
- (void)deviceOrientationDidChange:(NSNotification *)notification {
    [RTCDispatcher dispatchAsyncOnType:RTCDispatcherTypeCaptureSession
                                 block:^{
        [self updateOrientation];
    }];
}
#endif

#pragma mark AVCaptureVideoDataOutputSampleBufferDelegate

//- (void)captureOutput:(AVCaptureOutput *)captureOutput
//    didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer
//           fromConnection:(AVCaptureConnection *)connection {
//    NSParameterAssert(captureOutput == _videoDataOutput);
//
//    if (CMSampleBufferGetNumSamples(sampleBuffer) != 1 || !CMSampleBufferIsValid(sampleBuffer) ||
//        !CMSampleBufferDataIsReady(sampleBuffer)) {
//        return;
//    }
//
//    CVPixelBufferRef pixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);
//    if (pixelBuffer == nil) {
//        return;
//    }
//
//#if TARGET_OS_IPHONE
//    // Default to portrait orientation on iPhone.
//    BOOL usingFrontCamera = NO;
//    // Check the image's EXIF for the camera the image came from as the image could have been
//    // delayed as we set alwaysDiscardsLateVideoFrames to NO.
//
//    AVCaptureDeviceInput *deviceInput =
//        (AVCaptureDeviceInput *)((AVCaptureInputPort *)connection.inputPorts.firstObject).input;
//    usingFrontCamera = AVCaptureDevicePositionFront == deviceInput.device.position;
//
//    switch (_orientation) {
//        case UIDeviceOrientationPortrait:
//            _rotation = RTCVideoRotation_90;
//            break;
//        case UIDeviceOrientationPortraitUpsideDown:
//            _rotation = RTCVideoRotation_270;
//            break;
//        case UIDeviceOrientationLandscapeLeft:
//            _rotation = usingFrontCamera ? RTCVideoRotation_180 : RTCVideoRotation_0;
//            break;
//        case UIDeviceOrientationLandscapeRight:
//            _rotation = usingFrontCamera ? RTCVideoRotation_0 : RTCVideoRotation_180;
//            break;
//        case UIDeviceOrientationFaceUp:
//        case UIDeviceOrientationFaceDown:
//        case UIDeviceOrientationUnknown:
//            // Ignore.
//            break;
//    }
//#else
//    // No rotation on Mac.
//    _rotation = RTCVideoRotation_0;
//#endif
//
//    if (self.delegate && [self.delegate respondsToSelector:@selector(rtcCameraVideoCapturer:didOutputSampleBuffer:)]) {
//        [self.delegate rtcCameraVideoCapturer:self didOutputSampleBuffer:sampleBuffer];
//    }
//}

#pragma mark - AVCaptureSession notifications

- (void)handleCaptureSessionInterruption:(NSNotification *)notification {
    NSString *reasonString = nil;
#if TARGET_OS_IPHONE
    NSNumber *reason = notification.userInfo[AVCaptureSessionInterruptionReasonKey];
    if (reason) {
        switch (reason.intValue) {
            case AVCaptureSessionInterruptionReasonVideoDeviceNotAvailableInBackground:
                reasonString = @"VideoDeviceNotAvailableInBackground";
                break;
            case AVCaptureSessionInterruptionReasonAudioDeviceInUseByAnotherClient:
                reasonString = @"AudioDeviceInUseByAnotherClient";
                break;
            case AVCaptureSessionInterruptionReasonVideoDeviceInUseByAnotherClient:
                reasonString = @"VideoDeviceInUseByAnotherClient";
                break;
            case AVCaptureSessionInterruptionReasonVideoDeviceNotAvailableWithMultipleForegroundApps:
                reasonString = @"VideoDeviceNotAvailableWithMultipleForegroundApps";
                break;
        }
    }
#endif
    RTCLog(@"Capture session interrupted: %@", reasonString);
}

- (void)handleCaptureSessionInterruptionEnded:(NSNotification *)notification {
    RTCLog(@"Capture session interruption ended.");
}

- (void)handleCaptureSessionRuntimeError:(NSNotification *)notification {
    NSError *error = [notification.userInfo objectForKey:AVCaptureSessionErrorKey];
    RTCLogError(@"Capture session runtime error: %@", error);

    [RTCDispatcher dispatchAsyncOnType:RTCDispatcherTypeCaptureSession
                                 block:^{
#if TARGET_OS_IPHONE
        if (error.code == AVErrorMediaServicesWereReset) {
            [self handleNonFatalError];
        } else {
            [self handleFatalError];
        }
#else
        [self handleFatalError];
#endif
    }];
}

- (void)handleCaptureSessionDidStartRunning:(NSNotification *)notification {
    RTCLog(@"Capture session started.");

    [RTCDispatcher dispatchAsyncOnType:RTCDispatcherTypeCaptureSession
                                 block:^{
        // If we successfully restarted after an unknown error,
        // allow future retries on fatal errors.
        self.hasRetriedOnFatalError = NO;
    }];
}

- (void)handleCaptureSessionDidStopRunning:(NSNotification *)notification {
    RTCLog(@"Capture session stopped.");
}

- (void)handleFatalError {
    [RTCDispatcher dispatchAsyncOnType:RTCDispatcherTypeCaptureSession
                                 block:^{
        if (!self.hasRetriedOnFatalError) {
            RTCLogWarning(@"Attempting to recover from fatal capture error.");
            [self handleNonFatalError];
            self.hasRetriedOnFatalError = YES;
        } else {
            RTCLogError(@"Previous fatal error recovery failed.");
        }
    }];
}

- (void)handleNonFatalError {
    [RTCDispatcher dispatchAsyncOnType:RTCDispatcherTypeCaptureSession
                                 block:^{
        RTCLog(@"Restarting capture session after error.");
        if (self.isRunning) {
            [self.captureSession startRunning];
        }
    }];
}

#if TARGET_OS_IPHONE

#pragma mark - UIApplication notifications

- (void)handleApplicationDidBecomeActive:(NSNotification *)notification {
    [RTCDispatcher dispatchAsyncOnType:RTCDispatcherTypeCaptureSession
                                 block:^{
        if (self.isRunning && !self.captureSession.isRunning) {
            RTCLog(@"Restarting capture session on active.");
            [self.captureSession startRunning];
        }
    }];
}

#endif  // TARGET_OS_IPHONE

#pragma mark - Private

- (dispatch_queue_t)frameQueue {
    if (!_frameQueue) {
        _frameQueue = dispatch_queue_create("org.webrtc.cameravideocapturer.video", DISPATCH_QUEUE_SERIAL);
        dispatch_set_target_queue(_frameQueue, dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_HIGH, 0));
    }
    return _frameQueue;
}

- (BOOL)setupCaptureSession:(AVCaptureSession *)captureSession {
    NSAssert(_captureSession == nil, @"Setup capture session called twice.");
    _captureSession = captureSession;
#if defined(WEBRTC_IOS)
    _captureSession.sessionPreset = AVCaptureSessionPresetInputPriority;
    _captureSession.usesApplicationAudioSession = NO;
#endif
    [self setupVideoDataOutput];
    // Add the output.
    if (![_captureSession canAddOutput:_videoDataOutput]) {
        RTCLogError(@"Video data output unsupported.");
        return NO;
    }
    [_captureSession addOutput:_videoDataOutput];

    return YES;
}

- (void)setupVideoDataOutput {
    NSAssert(_videoDataOutput == nil, @"Setup video data output called twice.");
    AVCaptureVideoDataOutput *videoDataOutput = [[AVCaptureVideoDataOutput alloc] init];

    // `videoDataOutput.availableVideoCVPixelFormatTypes` returns the pixel formats supported by the
    // device with the most efficient output format first. Find the first format that we support.
    NSSet<NSNumber *> *supportedPixelFormats = [RTCCVPixelBuffer supportedPixelFormats];
    NSMutableOrderedSet *availablePixelFormats =
        [NSMutableOrderedSet orderedSetWithArray:videoDataOutput.availableVideoCVPixelFormatTypes];
    [availablePixelFormats intersectSet:supportedPixelFormats];
    NSNumber *pixelFormat = availablePixelFormats.firstObject;
    NSAssert(pixelFormat, @"Output device has no supported formats.");

    _preferredOutputPixelFormat = [pixelFormat unsignedIntValue];
    _outputPixelFormat = _preferredOutputPixelFormat;
//    videoDataOutput.videoSettings = @{(NSString *)kCVPixelBufferPixelFormatTypeKey : pixelFormat};
//    [videoDataOutput setAlwaysDiscardsLateVideoFrames:YES];
    // Note: the output is fixed to 32BGRA here, so `preferredOutputPixelFormat`
    // can differ from the format of the buffers actually delivered.
    videoDataOutput.videoSettings = @{(id)kCVPixelBufferPixelFormatTypeKey : @(kCVPixelFormatType_32BGRA)};
    videoDataOutput.alwaysDiscardsLateVideoFrames = YES;
    [videoDataOutput setSampleBufferDelegate:self queue:self.frameQueue];
    _videoDataOutput = videoDataOutput;

//    // Set video orientation and rotation
//    AVCaptureConnection *connection = [videoDataOutput connectionWithMediaType:AVMediaTypeVideo];
//    [connection setVideoOrientation:AVCaptureVideoOrientationPortrait];
//    [connection setVideoMirrored:NO];
}

- (void)updateVideoDataOutputPixelFormat:(AVCaptureDeviceFormat *)format {
//    FourCharCode mediaSubType = CMFormatDescriptionGetMediaSubType(format.formatDescription);
//    if (![[RTCCVPixelBuffer supportedPixelFormats] containsObject:@(mediaSubType)]) {
//        mediaSubType = _preferredOutputPixelFormat;
//    }
//
//    if (mediaSubType != _outputPixelFormat) {
//        _outputPixelFormat = mediaSubType;
//        _videoDataOutput.videoSettings =
//            @{ (NSString *)kCVPixelBufferPixelFormatTypeKey : @(mediaSubType) };
//    }
}

#pragma mark - Private, called inside capture queue

- (void)updateDeviceCaptureFormat:(AVCaptureDeviceFormat *)format fps:(NSInteger)fps {
    NSAssert([RTCDispatcher isOnQueueForType:RTCDispatcherTypeCaptureSession],
             @"updateDeviceCaptureFormat must be called on the capture queue.");
    @try {
        _currentDevice.activeFormat = format;
        _currentDevice.activeVideoMinFrameDuration = CMTimeMake(1, fps);
    } @catch (NSException *exception) {
        RTCLogError(@"Failed to set active format!\n User info:%@", exception.userInfo);
        return;
    }
}

- (void)reconfigureCaptureSessionInput {
    NSAssert([RTCDispatcher isOnQueueForType:RTCDispatcherTypeCaptureSession],
             @"reconfigureCaptureSessionInput must be called on the capture queue.");
    NSError *error = nil;
    AVCaptureDeviceInput *input =
        [AVCaptureDeviceInput deviceInputWithDevice:_currentDevice error:&error];
    if (!input) {
        RTCLogError(@"Failed to create front camera input: %@", error.localizedDescription);
        return;
    }
    [_captureSession beginConfiguration];
    for (AVCaptureDeviceInput *oldInput in [_captureSession.inputs copy]) {
        [_captureSession removeInput:oldInput];
    }
    if ([_captureSession canAddInput:input]) {
        [_captureSession addInput:input];
    } else {
        RTCLogError(@"Cannot add camera as an input to the session.");
    }
    [_captureSession commitConfiguration];
}

- (void)updateOrientation {
    NSAssert([RTCDispatcher isOnQueueForType:RTCDispatcherTypeCaptureSession],
             @"updateOrientation must be called on the capture queue.");
#if TARGET_OS_IPHONE
    _orientation = [UIDevice currentDevice].orientation;
#endif
}

- (instancetype)initWithDevicePosition:(AVCaptureDevicePosition)iDevicePosition
                        sessionPresset:(AVCaptureSessionPreset)sessionPreset
                                   fps:(int)iFPS
                         needYuvOutput:(BOOL)needYuvOutput {
    self = [super init];
    if (self) {
        self.bSessionPause = YES;
        self.bufferQueue = dispatch_queue_create("STCameraBufferQueue", NULL);

        self.session = [[AVCaptureSession alloc] init];
        self.videoDevice = [self cameraDeviceWithPosition:iDevicePosition];
        _devicePosition = iDevicePosition;
        NSError *error = nil;
        self.deviceInput = [AVCaptureDeviceInput deviceInputWithDevice:self.videoDevice error:&error];

        if (!self.deviceInput || error) {
            NSLog(@"create input error");
            return nil;
        }

        self.dataOutput = [[AVCaptureVideoDataOutput alloc] init];
        [self.dataOutput setAlwaysDiscardsLateVideoFrames:YES];
        self.dataOutput.videoSettings = @{(id)kCVPixelBufferPixelFormatTypeKey : @(needYuvOutput ? kCVPixelFormatType_420YpCbCr8BiPlanarFullRange : kCVPixelFormatType_32BGRA)};
        [self.dataOutput setSampleBufferDelegate:self queue:self.bufferQueue];

        self.metaOutput = [[AVCaptureMetadataOutput alloc] init];
        [self.metaOutput setMetadataObjectsDelegate:self queue:self.bufferQueue];

        self.stillImageOutput = [[AVCaptureStillImageOutput alloc] init];
        self.stillImageOutput.outputSettings = @{AVVideoCodecKey : AVVideoCodecJPEG};
        if ([self.stillImageOutput respondsToSelector:@selector(setHighResolutionStillImageOutputEnabled:)]) {
            self.stillImageOutput.highResolutionStillImageOutputEnabled = YES;
        }

        [self.session beginConfiguration];

        if ([self.session canAddInput:self.deviceInput]) {
            [self.session addInput:self.deviceInput];
        } else {
            NSLog(@"Could not add device input to the session");
            return nil;
        }

        // Use the requested preset, falling back to 1080p, 720p, then 640x480.
        if ([self.session canSetSessionPreset:sessionPreset]) {
            [self.session setSessionPreset:sessionPreset];
            _sessionPreset = sessionPreset;
        } else if ([self.session canSetSessionPreset:AVCaptureSessionPreset1920x1080]) {
            [self.session setSessionPreset:AVCaptureSessionPreset1920x1080];
            _sessionPreset = AVCaptureSessionPreset1920x1080;
        } else if ([self.session canSetSessionPreset:AVCaptureSessionPreset1280x720]) {
            [self.session setSessionPreset:AVCaptureSessionPreset1280x720];
            _sessionPreset = AVCaptureSessionPreset1280x720;
        } else {
            [self.session setSessionPreset:AVCaptureSessionPreset640x480];
            _sessionPreset = AVCaptureSessionPreset640x480;
        }

        if ([self.session canAddOutput:self.dataOutput]) {
            [self.session addOutput:self.dataOutput];
        } else {
            NSLog(@"Could not add video data output to the session");
            return nil;
        }

        if ([self.session canAddOutput:self.metaOutput]) {
            [self.session addOutput:self.metaOutput];
            self.metaOutput.metadataObjectTypes = @[AVMetadataObjectTypeFace].copy;
        }

        if ([self.session canAddOutput:self.stillImageOutput]) {
            [self.session addOutput:self.stillImageOutput];
        } else {
            NSLog(@"Could not add still image output to the session");
        }

        self.videoConnection = [self.dataOutput connectionWithMediaType:AVMediaTypeVideo];

        if ([self.videoConnection isVideoOrientationSupported]) {
            [self.videoConnection setVideoOrientation:AVCaptureVideoOrientationPortrait];
            self.videoOrientation = AVCaptureVideoOrientationPortrait;
        }

        if ([self.videoConnection isVideoMirroringSupported]) {
            [self.videoConnection setVideoMirrored:YES];
            self.needVideoMirrored = YES;
        }

        // Lock the frame rate to iFPS.
        if ([_videoDevice lockForConfiguration:NULL] == YES) {
//            _videoDevice.activeFormat = bestFormat;
            _videoDevice.activeVideoMinFrameDuration = CMTimeMake(1, iFPS);
            _videoDevice.activeVideoMaxFrameDuration = CMTimeMake(1, iFPS);
            [_videoDevice unlockForConfiguration];
        }

        [self.session commitConfiguration];

        NSMutableDictionary *tmpSettings = [[self.dataOutput recommendedVideoSettingsForAssetWriterWithOutputFileType:AVFileTypeQuickTimeMovie] mutableCopy];
        if (!EFMachineVersion.isiPhone5sOrLater) {
            NSNumber *tmpSettingValue = tmpSettings[AVVideoHeightKey];
            tmpSettings[AVVideoHeightKey] = tmpSettings[AVVideoWidthKey];
            tmpSettings[AVVideoWidthKey] = tmpSettingValue;
        }
        self.videoCompressingSettings = [tmpSettings copy];

        self.iExpectedFPS = iFPS;

        [self addObservers];
    }
    return self;
}

- (void)rotateCamera {
    if (self.devicePosition == AVCaptureDevicePositionFront) {
        self.devicePosition = AVCaptureDevicePositionBack;
    } else {
        self.devicePosition = AVCaptureDevicePositionFront;
    }
}

- (void)rotateCamera:(BOOL)isUseFrontCamera {
    if (isUseFrontCamera) {
        self.devicePosition = AVCaptureDevicePositionFront;
    } else {
        self.devicePosition = AVCaptureDevicePositionBack;
    }
}

// Convert a tap in the portrait preview into the camera's point-of-interest space.
- (void)setExposurePoint:(CGPoint)point inPreviewFrame:(CGRect)frame {
    BOOL isFrontCamera = self.devicePosition == AVCaptureDevicePositionFront;
    float fX = point.y / frame.size.height;
    float fY = isFrontCamera ? point.x / frame.size.width : (1 - point.x / frame.size.width);
    [self focusWithMode:self.videoDevice.focusMode exposureMode:self.videoDevice.exposureMode atPoint:CGPointMake(fX, fY)];
}

- (void)focusWithMode:(AVCaptureFocusMode)focusMode exposureMode:(AVCaptureExposureMode)exposureMode atPoint:(CGPoint)point {
    NSError *error = nil;
    AVCaptureDevice *device = self.videoDevice;

    if ([device lockForConfiguration:&error]) {
        device.exposureMode = AVCaptureExposureModeContinuousAutoExposure;
//        - (void)setISOValue:(float)value{
        [self setISOValue:0];
        //device.exposureTargetBias

        // Setting (focus/exposure)PointOfInterest alone does not initiate a (focus/exposure) operation
        // Call -set(Focus/Exposure)Mode: to apply the new point of interest
        if (focusMode != AVCaptureFocusModeLocked && device.isFocusPointOfInterestSupported && [device isFocusModeSupported:focusMode]) {
            device.focusPointOfInterest = point;
            device.focusMode = focusMode;
        }

        if (exposureMode != AVCaptureExposureModeCustom && device.isExposurePointOfInterestSupported && [device isExposureModeSupported:exposureMode]) {
            device.exposurePointOfInterest = point;
            device.exposureMode = exposureMode;
        }

        device.subjectAreaChangeMonitoringEnabled = YES;
        [device unlockForConfiguration];
    }
}

- (void)setWhiteBalance {
    [self changeDeviceProperty:^(AVCaptureDevice *captureDevice) {
        if ([captureDevice isWhiteBalanceModeSupported:AVCaptureWhiteBalanceModeContinuousAutoWhiteBalance]) {
            [captureDevice setWhiteBalanceMode:AVCaptureWhiteBalanceModeContinuousAutoWhiteBalance];
        }
    }];
}

- (void)changeDeviceProperty:(void (^)(AVCaptureDevice *))propertyChange {
    AVCaptureDevice *captureDevice = self.videoDevice;
    NSError *error;
    if ([captureDevice lockForConfiguration:&error]) {
        propertyChange(captureDevice);
        [captureDevice unlockForConfiguration];
    } else {
        NSLog(@"Error while changing a device property: %@", error.localizedDescription);
    }
}

- (void)setISOValue:(float)value exposeDuration:(int)duration {
    // Clamp into [minISO, maxISO] of the active format; both bounds are applied.
    AVCaptureDeviceFormat *activeFormat = self.videoDevice.activeFormat;
    float currentISO = MIN(MAX(value, activeFormat.minISO), activeFormat.maxISO);
    NSError *error;
    if ([self.videoDevice lockForConfiguration:&error]) {
        [self.videoDevice setExposureModeCustomWithDuration:AVCaptureExposureDurationCurrent ISO:currentISO completionHandler:nil];
        [self.videoDevice unlockForConfiguration];
    }
}

- (void)setISOValue:(float)value {
//    float newVlaue = (value - 0.5) * (5.0 / 0.5); // mirror [0,1] to [-8,8]
    NSLog(@"%f", value);
    NSError *error = nil;
    if ([self.videoDevice lockForConfiguration:&error]) {
        // Despite the method name, this sets the exposure target bias (EV),
        // which must lie within [minExposureTargetBias, maxExposureTargetBias].
        float bias = MIN(MAX(value, self.videoDevice.minExposureTargetBias), self.videoDevice.maxExposureTargetBias);
        [self.videoDevice setExposureTargetBias:bias completionHandler:nil];
        [self.videoDevice unlockForConfiguration];
    } else {
        NSLog(@"Could not lock device for configuration: %@", error);
    }
}

- (void)setDevicePosition:(AVCaptureDevicePosition)devicePosition {
    if (_devicePosition != devicePosition && devicePosition != AVCaptureDevicePositionUnspecified) {
        if (_session) {
            AVCaptureDevice *targetDevice = [self cameraDeviceWithPosition:devicePosition];
            if (targetDevice && [self judgeCameraAuthorization]) {
                NSError *error = nil;
                AVCaptureDeviceInput *deviceInput = [[AVCaptureDeviceInput alloc] initWithDevice:targetDevice error:&error];
                if (!deviceInput || error) {
                    NSLog(@"Error creating capture device input: %@", error.localizedDescription);
                    return;
                }
                _bSessionPause = YES;
                [_session beginConfiguration];
                [_session removeInput:_deviceInput];
                if ([_session canAddInput:deviceInput]) {
                    [_session addInput:deviceInput];
                    _deviceInput = deviceInput;
                    _videoDevice = targetDevice;
                    _devicePosition = devicePosition;
                } else if ([_session canAddInput:_deviceInput]) {
                    // Restore the previous camera so the session is not left without an input.
                    [_session addInput:_deviceInput];
                }
                _videoConnection = [_dataOutput connectionWithMediaType:AVMediaTypeVideo];
                if ([_videoConnection isVideoOrientationSupported]) {
                    [_videoConnection setVideoOrientation:_videoOrientation];
                }
                if ([_videoConnection isVideoMirroringSupported]) {
                    [_videoConnection setVideoMirrored:devicePosition == AVCaptureDevicePositionFront];
                }
                [_session commitConfiguration];
                [self setSessionPreset:_sessionPreset];
                _bSessionPause = NO;
            }
        }
    }
}

- (void)setSessionPreset:(NSString *)sessionPreset {
    if (_session && _sessionPreset) {
//        if (![sessionPreset isEqualToString:_sessionPreset]) {
        _bSessionPause = YES;
        [_session beginConfiguration];
        if ([_session canSetSessionPreset:sessionPreset]) {
            [_session setSessionPreset:sessionPreset];
            _sessionPreset = sessionPreset;
        }
        [_session commitConfiguration];

        self.videoCompressingSettings = [[self.dataOutput recommendedVideoSettingsForAssetWriterWithOutputFileType:AVFileTypeQuickTimeMovie] copy];
//        [self setIExpectedFPS:_iExpectedFPS];
        _bSessionPause = NO;
//        }
    }
}

- (void)setIExpectedFPS:(int)iExpectedFPS {
    _iExpectedFPS = iExpectedFPS;
    if (iExpectedFPS <= 0 || !_dataOutput.videoSettings || !_videoDevice) {
        return;
    }
    CGFloat fWidth = [[_dataOutput.videoSettings objectForKey:@"Width"] floatValue];
    CGFloat fHeight = [[_dataOutput.videoSettings objectForKey:@"Height"] floatValue];
    AVCaptureDeviceFormat *bestFormat = nil;
    AVFrameRateRange *bestFrameRateRange = nil;
    // Among 420f formats matching the output size, pick the one with the highest max frame rate.
    for (AVCaptureDeviceFormat *format in [_videoDevice formats]) {
        CMFormatDescriptionRef description = format.formatDescription;
        if (CMFormatDescriptionGetMediaSubType(description) != kCVPixelFormatType_420YpCbCr8BiPlanarFullRange) {
            continue;
        }
        CMVideoDimensions videoDimension = CMVideoFormatDescriptionGetDimensions(description);
        if ((videoDimension.width == fWidth && videoDimension.height == fHeight) ||
            (videoDimension.height == fWidth && videoDimension.width == fHeight)) {
            for (AVFrameRateRange *range in format.videoSupportedFrameRateRanges) {
                if (range.maxFrameRate >= bestFrameRateRange.maxFrameRate) {
                    bestFormat = format;
                    bestFrameRateRange = range;
                }
            }
        }
    }

    if (bestFormat) {
        CMTime minFrameDuration;
        // If the format cannot reach the expected FPS, use its fastest rate instead.
        if (bestFrameRateRange.minFrameDuration.timescale / bestFrameRateRange.minFrameDuration.value < iExpectedFPS) {
            minFrameDuration = bestFrameRateRange.minFrameDuration;
        } else {
            minFrameDuration = CMTimeMake(1, iExpectedFPS);
        }

        if ([_videoDevice lockForConfiguration:NULL] == YES) {
            _videoDevice.activeFormat = bestFormat;
            _videoDevice.activeVideoMinFrameDuration = minFrameDuration;
            _videoDevice.activeVideoMaxFrameDuration = minFrameDuration;
            [_videoDevice unlockForConfiguration];
        }
    }
}

- (void)startRunning {
    if (![self judgeCameraAuthorization]) {
        return;
    }
    if (!self.dataOutput) {
        return;
    }
    if (self.session && ![self.session isRunning]) {
        if (self.bufferQueue) {
            dispatch_async(self.bufferQueue, ^{
                [self.session startRunning];
            });
        }
        self.bSessionPause = NO;
    }
}

- (void)stopRunning {
    if (self.session && [self.session isRunning]) {
        if (self.bufferQueue) {
            dispatch_async(self.bufferQueue, ^{
                [self.session stopRunning];
            });
        }
        self.bSessionPause = YES;
    }
}

- (CGRect)getZoomedRectWithRect:(CGRect)rect scaleToFit:(BOOL)bScaleToFit {
    CGRect rectRet = rect;
    if (self.dataOutput.videoSettings) {
        CGFloat fWidth = [[self.dataOutput.videoSettings objectForKey:@"Width"] floatValue];
        CGFloat fHeight = [[self.dataOutput.videoSettings objectForKey:@"Height"] floatValue];
        float fScaleX = fWidth / CGRectGetWidth(rect);
        float fScaleY = fHeight / CGRectGetHeight(rect);
        float fScale = bScaleToFit ? fmaxf(fScaleX, fScaleY) : fminf(fScaleX, fScaleY);
        fWidth /= fScale;
        fHeight /= fScale;
        CGFloat fX = rect.origin.x - (fWidth - rect.size.width) / 2.0f;
        CGFloat fY = rect.origin.y - (fHeight - rect.size.height) / 2.0f;
        rectRet = CGRectMake(fX, fY, fWidth, fHeight);
    }
    return rectRet;
}

- (BOOL)judgeCameraAuthorization {
    AVAuthorizationStatus authStatus = [AVCaptureDevice authorizationStatusForMediaType:AVMediaTypeVideo];
    if (authStatus == AVAuthorizationStatusRestricted || authStatus == AVAuthorizationStatusDenied) {
        return NO;
    }
    return YES;
}

- (AVCaptureDevice *)cameraDeviceWithPosition:(AVCaptureDevicePosition)position {
    AVCaptureDevice *deviceRet = nil;
    if (position != AVCaptureDevicePositionUnspecified) {
        NSArray *devices = [AVCaptureDevice devicesWithMediaType:AVMediaTypeVideo];
        for (AVCaptureDevice *device in devices) {
            if ([device position] == position) {
                deviceRet = device;
            }
        }
    }
    return deviceRet;
}

- (AVCaptureVideoPreviewLayer *)previewLayer {
    if (!_previewLayer) {
        _previewLayer = [[AVCaptureVideoPreviewLayer alloc] initWithSession:self.session];
    }
    return _previewLayer;
}

- (void)snapStillImageCompletionHandler:(void (^)(CMSampleBufferRef imageDataSampleBuffer, NSError *error))handler {
    if ([self judgeCameraAuthorization]) {
        self.bSessionPause = YES;
        NSString *strSessionPreset = [self.sessionPreset mutableCopy];
        self.sessionPreset = AVCaptureSessionPresetPhoto;
        // Changing the preset briefly turns the preview black.
        [NSThread sleepForTimeInterval:0.3];

        dispatch_async(self.bufferQueue, ^{
            [self.stillImageOutput captureStillImageAsynchronouslyFromConnection:[self.stillImageOutput connectionWithMediaType:AVMediaTypeVideo] completionHandler:^(CMSampleBufferRef imageDataSampleBuffer, NSError *error) {
                self.bSessionPause = NO;
                self.sessionPreset = strSessionPreset;
                handler(imageDataSampleBuffer, error);
            }];
        });
    }
}

BOOL updateExporureModel(STExposureModel model) {
    if (currentExposureMode == model) return NO;
    currentExposureMode = model;
    return YES;
}

- (void)test:(AVCaptureExposureMode)model {
    if
```
([self.videoDevice lockForConfiguration:nil]) { if ([self.videoDevice isExposureModeSupported:model]) { [self.videoDevice setExposureMode:model]; } [self.videoDevice unlockForConfiguration]; } } - (void)setExposureTime:(CMTime)time{ if ([self.videoDevice lockForConfiguration:nil]) { if (@available(iOS 12.0, *)) { self.videoDevice.activeMaxExposureDuration = time; } else { // Fallback on earlier versions } [self.videoDevice unlockForConfiguration]; } } - (void)setFPS:(float)fps{ if ([_videoDevice lockForConfiguration:NULL] == YES) { // _videoDevice.activeFormat = bestFormat; _videoDevice.activeVideoMinFrameDuration = CMTimeMake(1, fps); _videoDevice.activeVideoMaxFrameDuration = CMTimeMake(1, fps); [_videoDevice unlockForConfiguration]; } } - (void)updateExposure:(CMSampleBufferRef)sampleBuffer{ CFDictionaryRef metadataDict = CMCopyDictionaryOfAttachments(NULL,sampleBuffer, kCMAttachmentMode_ShouldPropagate); NSDictionary * metadata = [[NSMutableDictionary alloc] initWithDictionary:(__bridge NSDictionary *)metadataDict]; CFRelease(metadataDict); NSDictionary *exifMetadata = [[metadata objectForKey:(NSString *)kCGImagePropertyExifDictionary] mutableCopy]; float brightnessValue = [[exifMetadata objectForKey:(NSString *)kCGImagePropertyExifBrightnessValue] floatValue]; if(brightnessValue > 2 && updateExporureModel(STExposureModelPositive2)){ [self setISOValue:500 exposeDuration:30]; [self test:AVCaptureExposureModeContinuousAutoExposure]; [self setFPS:30]; }else if(brightnessValue > 1 && brightnessValue < 2 && updateExporureModel(STExposureModelPositive1)){ [self setISOValue:500 exposeDuration:30]; [self test:AVCaptureExposureModeContinuousAutoExposure]; [self setFPS:30]; }else if(brightnessValue > 0 && brightnessValue < 1 && updateExporureModel(STExposureModel0)){ [self setISOValue:500 exposeDuration:30]; [self test:AVCaptureExposureModeContinuousAutoExposure]; [self setFPS:30]; }else if (brightnessValue > -1 && brightnessValue < 0 && 
updateExporureModel(STExposureModelNegative1)){ [self setISOValue:self.videoDevice.activeFormat.maxISO - 200 exposeDuration:40]; [self test:AVCaptureExposureModeContinuousAutoExposure]; }else if (brightnessValue > -2 && brightnessValue < -1 && updateExporureModel(STExposureModelNegative2)){ [self setISOValue:self.videoDevice.activeFormat.maxISO - 200 exposeDuration:35]; [self test:AVCaptureExposureModeContinuousAutoExposure]; }else if (brightnessValue > -2.5 && brightnessValue < -2 && updateExporureModel(STExposureModelNegative3)){ [self setISOValue:self.videoDevice.activeFormat.maxISO - 200 exposeDuration:30]; [self test:AVCaptureExposureModeContinuousAutoExposure]; }else if (brightnessValue > -3 && brightnessValue < -2.5 && updateExporureModel(STExposureModelNegative4)){ [self setISOValue:self.videoDevice.activeFormat.maxISO - 200 exposeDuration:25]; [self test:AVCaptureExposureModeContinuousAutoExposure]; }else if (brightnessValue > -3.5 && brightnessValue < -3 && updateExporureModel(STExposureModelNegative5)){ [self setISOValue:self.videoDevice.activeFormat.maxISO - 200 exposeDuration:20]; [self test:AVCaptureExposureModeContinuousAutoExposure]; }else if (brightnessValue > -4 && brightnessValue < -3.5 && updateExporureModel(STExposureModelNegative6)){ [self setISOValue:self.videoDevice.activeFormat.maxISO - 250 exposeDuration:15]; [self test:AVCaptureExposureModeContinuousAutoExposure]; }else if (brightnessValue > -5 && brightnessValue < -4 && updateExporureModel(STExposureModelNegative7)){ [self setISOValue:self.videoDevice.activeFormat.maxISO - 200 exposeDuration:10]; [self test:AVCaptureExposureModeContinuousAutoExposure]; }else if(brightnessValue < -5 && updateExporureModel(STExposureModelNegative8)){ [self setISOValue:self.videoDevice.activeFormat.maxISO - 150 exposeDuration:5]; [self test:AVCaptureExposureModeContinuousAutoExposure]; } // NSLog(@"current brightness %f min iso %f max iso %f min exposure %f max exposure %f", brightnessValue, 
self.videoDevice.activeFormat.minISO, self.videoDevice.activeFormat.maxISO, CMTimeGetSeconds(self.videoDevice.activeFormat.minExposureDuration), CMTimeGetSeconds(self.videoDevice.activeFormat.maxExposureDuration)); } - (void)captureOutput:(AVCaptureOutput *)captureOutput didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer fromConnection:(AVCaptureConnection *)connection { if (!self.bSessionPause) { if (self.delegate && [self.delegate respondsToSelector:@selector(rtcCameraVideoCapturer:didOutputSampleBuffer:)]) { [self.delegate rtcCameraVideoCapturer:self didOutputSampleBuffer:sampleBuffer]; } // if (self.delegate && [self.delegate respondsToSelector:@selector(captureOutput:didOutputSampleBuffer:fromConnection:)]) { // //[connection setVideoOrientation:AVCaptureVideoOrientationPortrait]; // [self.delegate captureOutput:captureOutput didOutputSampleBuffer:sampleBuffer fromConnection:connection]; // } } // [self updateExposure:sampleBuffer]; } - (void)captureOutput:(AVCaptureOutput *)output didOutputMetadataObjects:(NSArray<__kindof AVMetadataObject *> *)metadataObjects fromConnection:(AVCaptureConnection *)connection{ AVMetadataFaceObject *faceObject = nil; for(AVMetadataObject *object in metadataObjects){ if (AVMetadataObjectTypeFace == object.type) { faceObject = (AVMetadataFaceObject*)object; } } static BOOL hasFace = NO; if (!hasFace && faceObject.faceID) { hasFace = YES; } if (!faceObject.faceID) { hasFace = NO; } } #pragma mark - Notifications - (void)addObservers { [[NSNotificationCenter defaultCenter] addObserver:self selector:@selector(appWillResignActive) name:UIApplicationWillResignActiveNotification object:nil]; [[NSNotificationCenter defaultCenter] addObserver:self selector:@selector(appDidBecomeActive) name:UIApplicationDidBecomeActiveNotification object:nil]; [[NSNotificationCenter defaultCenter] addObserver:self selector:@selector(dealCaptureSessionRuntimeError:) name:AVCaptureSessionRuntimeErrorNotification object:self.session]; } - 
(void)removeObservers { [[NSNotificationCenter defaultCenter] removeObserver:self]; } #pragma mark - Notification - (void)appWillResignActive { RTCLogInfo("SDCustomRTCCameraCapturer appWillResignActive"); } - (void)appDidBecomeActive { RTCLogInfo("SDCustomRTCCameraCapturer appDidBecomeActive"); [self startRunning]; } - (void)dealCaptureSessionRuntimeError:(NSNotification *)notification { NSError *error = [notification.userInfo objectForKey:AVCaptureSessionErrorKey]; RTCLogError(@"SDCustomRTCCameraCapturer dealCaptureSessionRuntimeError error: %@", error); if (error.code == AVErrorMediaServicesWereReset) { [self startRunning]; } } #pragma mark - Dealloc - (void)dealloc { DebugLog(@"SDCustomRTCCameraCapturer dealloc"); [self removeObservers]; if (self.session) { self.bSessionPause = YES; [self.session beginConfiguration]; [self.session removeOutput:self.dataOutput]; [self.session removeInput:self.deviceInput]; [self.session commitConfiguration]; if ([self.session isRunning]) { [self.session stopRunning]; } self.session = nil; } } @end- 1


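The ISO clamp in setISOValue:exposeDuration: is plain arithmetic, so it can be sketched in C (which also compiles inside a .m file) and tested on its own. The sketch assumes the bounds come from activeFormat.minISO and activeFormat.maxISO; the name clamp_iso is hypothetical and not part of AVFoundation.

```c
#include <assert.h>

/* Hypothetical helper: clamp a requested ISO into [min_iso, max_iso],
 * mirroring AVCaptureDeviceFormat.minISO / maxISO. */
static float clamp_iso(float requested, float min_iso, float max_iso) {
    assert(min_iso <= max_iso); /* a valid format always has min <= max */
    if (requested < min_iso) {
        return min_iso;
    }
    if (requested > max_iso) {
        return max_iso;
    }
    return requested;
}
```

For a format whose ISO range were, say, 22 to 704 (illustrative numbers only), a request of 1000 would become 704 and a request of 10 would become 22.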

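setIExpectedFPS: decides the frame duration in one step: if the chosen format's fastest rate (timescale / value of its minFrameDuration) is below the requested fps, it keeps the format's minimum duration, otherwise it uses exactly 1/fps. A minimal C sketch of just that decision follows; the FrameDuration struct is a hypothetical stand-in for CMTime so the logic can run without Core Media.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical stand-in for CMTime: value / timescale seconds. */
typedef struct {
    int64_t value;
    int32_t timescale;
} FrameDuration;

/* Same decision as setIExpectedFPS:. If the format's fastest rate
 * (1 / format_min_duration) is below expected_fps, keep the format's
 * minimum frame duration; otherwise use exactly 1/expected_fps. */
static FrameDuration choose_min_frame_duration(FrameDuration format_min_duration,
                                               int32_t expected_fps) {
    assert(expected_fps > 0 && format_min_duration.value > 0);
    /* Integer division, as in the Objective-C code: timescale / value = max fps. */
    int64_t format_max_fps = format_min_duration.timescale / format_min_duration.value;
    if (format_max_fps < expected_fps) {
        return format_min_duration;
    }
    FrameDuration d = {1, expected_fps};
    return d;
}
```

The result is written to both activeVideoMinFrameDuration and activeVideoMaxFrameDuration, which pins the camera to a constant frame rate.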

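updateExposure: maps the EXIF BrightnessValue onto a ladder of STExposureModel buckets and reconfigures the device only when updateExporureModel() reports a change. Because every branch uses strict inequalities, a value lying exactly on a boundary (for example 1.0 or -2.0) matches no branch. The C sketch below shows one way to express the same ladder with half-open intervals; the enum, the bound table and the function names are hypothetical stand-ins, not the article's STExposureModel type.

```c
#include <assert.h>

/* Hypothetical buckets mirroring the STExposureModel cases, brightest first. */
typedef enum {
    BUCKET_POSITIVE2, /* brightness >= 2 */
    BUCKET_POSITIVE1, /* [1, 2)          */
    BUCKET_ZERO,      /* [0, 1)          */
    BUCKET_NEGATIVE1, /* [-1, 0)         */
    BUCKET_NEGATIVE2, /* [-2, -1)        */
    BUCKET_NEGATIVE3, /* [-2.5, -2)      */
    BUCKET_NEGATIVE4, /* [-3, -2.5)      */
    BUCKET_NEGATIVE5, /* [-3.5, -3)      */
    BUCKET_NEGATIVE6, /* [-4, -3.5)      */
    BUCKET_NEGATIVE7, /* [-5, -4)        */
    BUCKET_NEGATIVE8  /* brightness < -5 */
} BrightnessBucket;

/* Lower bound of every bucket except the last, in enum order. */
static const float kLowerBounds[] = {2.0f, 1.0f, 0.0f, -1.0f, -2.0f,
                                     -2.5f, -3.0f, -3.5f, -4.0f, -5.0f};

/* Map an EXIF BrightnessValue to a bucket using half-open intervals,
 * so boundary values such as exactly 1.0 or -2.0 still get a bucket. */
static BrightnessBucket bucket_for_brightness(float brightness) {
    int count = (int)(sizeof(kLowerBounds) / sizeof(kLowerBounds[0]));
    for (int i = 0; i < count; i++) {
        if (brightness >= kLowerBounds[i]) {
            return (BrightnessBucket)i;
        }
    }
    return BUCKET_NEGATIVE8;
}

/* Change detector in the spirit of updateExporureModel():
 * returns 1 (and records the bucket) only when it differs from the last one. */
static int bucket_changed(BrightnessBucket *last, BrightnessBucket current) {
    if (*last == current) {
        return 0;
    }
    *last = current;
    return 1;
}
```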

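getZoomedRectWithRect:scaleToFit: computes where the video frame lands when it is scaled into a view: aspect-fit divides by the larger of the two size ratios so the whole frame stays visible, aspect-fill divides by the smaller one so the view is fully covered, and the result is centered on the view. Here is a portable C sketch of the same arithmetic, with a hypothetical Rect struct standing in for CGRect.

```c
#include <assert.h>

/* Hypothetical stand-in for CGRect. */
typedef struct {
    double x, y, width, height;
} Rect;

/* Same arithmetic as getZoomedRectWithRect:scaleToFit:.
 * scale_to_fit != 0 gives aspect-fit (whole video visible),
 * otherwise aspect-fill (view fully covered). Result is centered on view. */
static Rect zoomed_rect(double video_w, double video_h, Rect view, int scale_to_fit) {
    assert(view.width > 0 && view.height > 0);
    double sx = video_w / view.width;
    double sy = video_h / view.height;
    double s = scale_to_fit ? (sx > sy ? sx : sy) : (sx < sy ? sx : sy);
    double w = video_w / s;
    double h = video_h / s;
    Rect r = {view.x - (w - view.width) / 2.0, view.y - (h - view.height) / 2.0, w, h};
    return r;
}
```

For a 1920x1080 frame in a 960x960 view, aspect-fit yields a 960x540 rect moved 210 points down, while aspect-fill yields a rect 960 tall that is wider than the view and shifted left so it stays centered.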
3. Implementing video calls with WebRTC and ossrs

For how the iOS client calls ossrs through WebRTC to implement live video calls, see:

https://blog.csdn.net/gloryFlow/article/details/132262724

Only the parts that need to change are listed here.

Use the SDCustomRTCCameraCapturer class in createVideoTrack:

- (RTCVideoTrack *)createVideoTrack {
    RTCVideoSource *videoSource = [self.factory videoSource];
    self.localVideoSource = videoSource;

    // On the simulator there is no camera, so frames are read from a file instead
    if (TARGET_IPHONE_SIMULATOR) {
        if (@available(iOS 10, *)) {
            self.videoCapturer = [[RTCFileVideoCapturer alloc] initWithDelegate:self];
        } else {
            // Fallback on earlier versions
        }
    } else {
        self.videoCapturer = [[SDCustomRTCCameraCapturer alloc] initWithDevicePosition:AVCaptureDevicePositionFront sessionPresset:AVCaptureSessionPreset1920x1080 fps:20 needYuvOutput:NO];
        // self.videoCapturer = [[SDCustomRTCCameraCapturer alloc] initWithDelegate:self];
    }

    RTCVideoTrack *videoTrack = [self.factory videoTrackWithSource:videoSource trackId:@"video0"];
    return videoTrack;
}


To render the local video on screen, call startCaptureLocalVideo:, which adds a renderer view to localVideoTrack. The renderer is an RTCMTLVideoView:

self.localRenderer = [[RTCMTLVideoView alloc] initWithFrame:CGRectZero];
self.localRenderer.delegate = self;


- (void)startCaptureLocalVideo:(id<RTCVideoRenderer>)renderer {
    if (!self.isPublish) {
        return;
    }
    if (!renderer) {
        return;
    }
    if (!self.videoCapturer) {
        return;
    }
    [self setDegradationPreference:RTCDegradationPreferenceMaintainResolution];

    RTCVideoCapturer *capturer = self.videoCapturer;
    if ([capturer isKindOfClass:[SDCustomRTCCameraCapturer class]]) {
        AVCaptureDevice *camera = [self findDeviceForPosition:self.usingFrontCamera ? AVCaptureDevicePositionFront : AVCaptureDevicePositionBack];
        SDCustomRTCCameraCapturer *cameraVideoCapturer = (SDCustomRTCCameraCapturer *)capturer;
        [cameraVideoCapturer setISOValue:0.0];
        [cameraVideoCapturer rotateCamera:self.usingFrontCamera];
        // Frames come back through SDCustomRTCCameraCapturerDelegate
        cameraVideoCapturer.delegate = self;

        AVCaptureDeviceFormat *formatNilable = [self selectFormatForDevice:camera];
        if (!formatNilable) {
            return;
        }
        DebugLog(@"formatNilable:%@", formatNilable);

        NSInteger fps = [self selectFpsForFormat:formatNilable];
        CMVideoDimensions videoVideoDimensions = CMVideoFormatDescriptionGetDimensions(formatNilable.formatDescription);
        float width = videoVideoDimensions.width;
        float height = videoVideoDimensions.height;
        DebugLog(@"videoVideoDimensions width:%f,height:%f", width, height);

        [cameraVideoCapturer startRunning];
        // [cameraVideoCapturer startCaptureWithDevice:camera format:formatNilable fps:fps completionHandler:^(NSError *error) {
        //     DebugLog(@"startCaptureWithDevice error:%@", error);
        // }];
        [self changeResolution:(int)width height:(int)height fps:(int)fps];
    }

    if (@available(iOS 10, *)) {
        if ([capturer isKindOfClass:[RTCFileVideoCapturer class]]) {
            RTCFileVideoCapturer *fileVideoCapturer = (RTCFileVideoCapturer *)capturer;
            [fileVideoCapturer startCapturingFromFileNamed:@"beautyPicture.mp4" onError:^(NSError * _Nonnull error) {
                DebugLog(@"startCaptureLocalVideo startCapturingFromFileNamed error:%@", error);
            }];
        }
    } else {
        // Fallback on earlier versions
    }

    [self.localVideoTrack addRenderer:renderer];
}


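selectFpsForFormat: is not shown in this part of the article. Assuming it follows the pattern of WebRTC's AppRTCMobile sample (take the highest maxFrameRate among the format's videoSupportedFrameRateRanges and cap it at kFramerateLimit, which WebRTCClient.h defines as 30.0), its core logic reduces to the C sketch below. select_fps and FRAMERATE_LIMIT are hypothetical names, and the frame-rate ranges are passed as a plain array instead of AVFrameRateRange objects.

```c
#include <assert.h>
#include <stddef.h>

/* Same value as the kFramerateLimit macro in WebRTCClient.h. */
#define FRAMERATE_LIMIT 30.0

/* Hypothetical C version of a selectFpsForFormat:-style helper: take the
 * highest maxFrameRate among a format's ranges, capped at FRAMERATE_LIMIT. */
static long select_fps(const double *max_frame_rates, size_t count) {
    double best = 0.0;
    for (size_t i = 0; i < count; i++) {
        if (max_frame_rates[i] > best) {
            best = max_frame_rates[i];
        }
    }
    double capped = best < FRAMERATE_LIMIT ? best : FRAMERATE_LIMIT;
    return (long)capped; /* truncates, like converting to NSInteger */
}
```

Capping the rate keeps a 60 fps capable format from pushing more frames into the encoder than the call is configured for.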
Captured camera frames arrive in the delegate method:

- (void)rtcCameraVideoCapturer:(RTCVideoCapturer *)capturer didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer;

The implementation is as follows:

- (void)rtcCameraVideoCapturer:(RTCVideoCapturer *)capturer didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer {
    CVPixelBufferRef pixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);
    if (!pixelBuffer) {
        return;
    }
    // Wrap the camera's CVPixelBuffer so WebRTC can use it without copying.
    RTCCVPixelBuffer *rtcPixelBuffer = [[RTCCVPixelBuffer alloc] initWithPixelBuffer:pixelBuffer];
    // Presentation timestamp in nanoseconds.
    int64_t timeStampNs = CMTimeGetSeconds(CMSampleBufferGetPresentationTimeStamp(sampleBuffer)) * 1000000000;
    // Assumption: RTCVideoRotation_0 relies on the capturer's AVCaptureConnection
    // already rotating frames (setVideoOrientation:); change it if yours does not.
    RTCVideoFrame *rtcVideoFrame = [[RTCVideoFrame alloc] initWithBuffer:rtcPixelBuffer
                                                                rotation:RTCVideoRotation_0
                                                             timeStampNs:timeStampNs];
    [self.localVideoSource capturer:capturer didCaptureVideoFrame:rtcVideoFrame];
}


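The delegate converts the presentation timestamp to nanoseconds with CMTimeGetSeconds(...) * 1000000000, that is, through a double. If an integer-only conversion is preferred, the CMTime value/timescale pair can be scaled directly, as in this C sketch. cmtime_to_ns is a hypothetical helper; on iOS, CMTimeConvertScale(time, 1000000000, kCMTimeRoundingMethod_Default).value gives a comparable result, rounded rather than truncated.

```c
#include <assert.h>
#include <stdint.h>

#define NSEC_PER_SECOND INT64_C(1000000000)

/* Convert a CMTime-style (value, timescale) pair to nanoseconds using
 * integer math only. Splitting value into whole seconds and a remainder
 * keeps the multiplication from overflowing for realistic timestamps.
 * The fractional part is truncated toward zero. */
static int64_t cmtime_to_ns(int64_t value, int32_t timescale) {
    assert(timescale > 0);
    int64_t whole_seconds = value / timescale;
    int64_t remainder = value % timescale;
    return whole_seconds * NSEC_PER_SECOND + remainder * NSEC_PER_SECOND / timescale;
}
```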
The complete WebRTCClient code follows.

WebRTCClient.h

#import <Foundation/Foundation.h>
#import <WebRTC/WebRTC.h>
#import <UIKit/UIKit.h>
#import "SDCustomRTCCameraCapturer.h"

#define kSelectedResolution @"kSelectedResolutionIndex"
#define kFramerateLimit 30.0

@protocol WebRTCClientDelegate;

@interface WebRTCClient : NSObject

@property (nonatomic, weak) id<WebRTCClientDelegate> delegate;

/** Peer connection factory */
@property (nonatomic, strong) RTCPeerConnectionFactory *factory;

/** Whether this client publishes (pushes) a stream */
@property (nonatomic, assign) BOOL isPublish;

/** Peer connection */
@property (nonatomic, strong) RTCPeerConnection *peerConnection;

/** RTCAudioSession */
@property (nonatomic, strong) RTCAudioSession *rtcAudioSession;

/** DispatchQueue */
@property (nonatomic) dispatch_queue_t audioQueue;

/** mediaConstrains */
@property (nonatomic, strong) NSDictionary *mediaConstrains;

/** publishMediaConstrains */
@property (nonatomic, strong) NSDictionary *publishMediaConstrains;

/** playMediaConstrains */
@property (nonatomic, strong) NSDictionary *playMediaConstrains;

/** optionalConstraints */
@property (nonatomic, strong) NSDictionary *optionalConstraints;

/** RTCVideoCapturer camera capturer */
@property (nonatomic, strong) RTCVideoCapturer *videoCapturer;

/** Local audio track */
@property (nonatomic, strong) RTCAudioTrack *localAudioTrack;

/** localVideoTrack */
@property (nonatomic, strong) RTCVideoTrack *localVideoTrack;

/** remoteVideoTrack */
@property (nonatomic, strong) RTCVideoTrack *remoteVideoTrack;

/** RTCVideoRenderer */
@property (nonatomic, weak) id<RTCVideoRenderer> remoteRenderView;

/** localDataChannel */
@property (nonatomic, strong) RTCDataChannel *localDataChannel;

/** remoteDataChannel */
@property (nonatomic, strong) RTCDataChannel *remoteDataChannel;

/** RTCVideoSource */
@property (nonatomic, strong) RTCVideoSource *localVideoSource;

- (instancetype)initWithPublish:(BOOL)isPublish;

- (void)startCaptureLocalVideo:(id<RTCVideoRenderer>)renderer;

- (void)addIceCandidate:(RTCIceCandidate *)candidate;

- (void)answer:(void (^)(RTCSessionDescription *sdp))completionHandler;

- (void)offer:(void (^)(RTCSessionDescription *sdp))completionHandler;

- (void)setRemoteSdp:(RTCSessionDescription *)remoteSdp completion:(void (^)(NSError *error))completion;

- (void)setRemoteCandidate:(RTCIceCandidate *)remoteCandidate;

- (BOOL)changeResolution:(int)width height:(int)height fps:(int)fps;

- (NSArray<NSString *> *)availableVideoResolutions;

#pragma mark - switchCamera
- (void)switchCamera:(id<RTCVideoRenderer>)renderer;

#pragma mark - Hide or show video
- (void)hidenVideo;

- (void)showVideo;

#pragma mark - Hide or show audio
- (void)muteAudio;

- (void)unmuteAudio;

- (void)speakOff;

- (void)speakOn;

#pragma mark - Set video bitrate (bps)
- (void)setMaxBitrate:(int)maxBitrate;

- (void)setMinBitrate:(int)minBitrate;

#pragma mark - Set video frame rate
- (void)setMaxFramerate:(int)maxFramerate;

- (void)close;

@end

@protocol WebRTCClientDelegate <NSObject>

// Hook for beauty-filter processing
- (RTCVideoFrame *)webRTCClient:(WebRTCClient *)client didCaptureSampleBuffer:(CMSampleBufferRef)sampleBuffer;

- (void)webRTCClient:(WebRTCClient *)client didDiscoverLocalCandidate:(RTCIceCandidate *)candidate;
- (void)webRTCClient:(WebRTCClient *)client didChangeConnectionState:(RTCIceConnectionState)state;
- (void)webRTCClient:(WebRTCClient *)client didReceiveData:(NSData *)data;

@end


WebRTCClient.m