-

【云原生】Sqoop on k8s 讲解与实战操作

一、概述

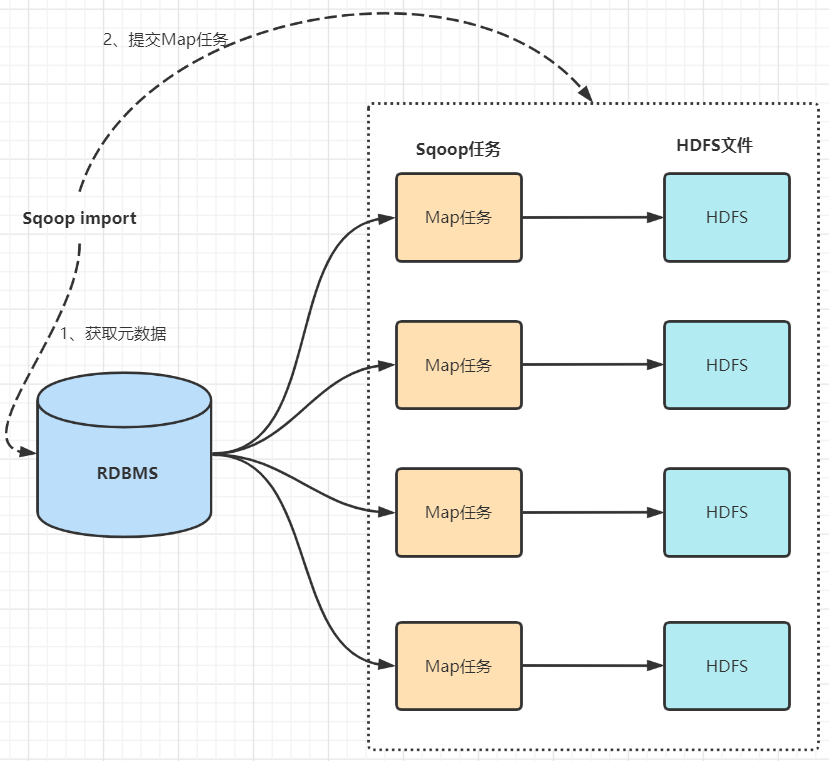

Sqoop是一个在结构化数据和Hadoop之间进行批量数据迁移的工具,结构化数据可以是MySQL、Oracle等RDBMS。Sqoop底层用MapReduce程序实现抽取、转换、加载,MapReduce天生的特性保证了并行化和高容错率,而且相比Kettle等传统ETL工具,任务跑在Hadoop集群上,减少了ETL服务器资源的使用情况。在特定场景下,抽取过程会有很大的性能提升。

Sqoop 架构:

-

想了解更多Sqoop也可参考我这篇文章:大数据Hadoop之——数据同步工具Sqoop

-

这里的hadoop环境部署可以参考我这篇文章:【云原生】Hadoop HA on k8s 环境部署

-

mysql环境部署可以参考我这篇文章:【云原生】MySQL on k8s 环境部署

二、开始编排部署

1)下载 Sqoop 部署包

下载地址:http://archive.apache.org/dist/sqoop/

wget http://archive.apache.org/dist/sqoop/1.99.7/sqoop-1.99.7-bin-hadoop200.tar.gz tar -xf sqoop-1.99.7-bin-hadoop200.tar.gz- 1

- 2

2)构建镜像

DockerfileFROM myharbor.com/bigdata/centos:7.9.2009 RUN rm -f /etc/localtime && ln -sv /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo "Asia/Shanghai" > /etc/timezone ENV LANG=zh_CN.UTF-8 RUN groupadd --system --gid=10000 admin && useradd --system --home-dir /home/admin --uid=10000 --gid=admin -m admin ### install tools RUN yum install -y vim tar wget curl less telnet net-tools lsof mysql RUN mkdir -p /opt/apache COPY docker-entrypoint.sh /opt/apache/ RUN chmod +x /opt/apache/docker-entrypoint.sh ### JDK ADD jdk-8u212-linux-x64.tar.gz /opt/apache/ ENV JAVA_HOME /opt/apache/jdk1.8.0_212 ENV PATH=$JAVA_HOME/bin:$PATH ### Sqoop ADD sqoop-1.99.7-bin-hadoop200.tar.gz /opt/apache/ # 配置环境变量 ENV SQOOP_HOME=/opt/apache/sqoop ENV PATH=$SQOOP_HOME/bin:$PATH ENV SQOOP_SERVER_EXTRA_LIB=$SQOOP_HOME/lib RUN ln -s /opt/apache/sqoop-1.99.7-bin-hadoop200 $SQOOP_HOME ENV PATH=$SQOOP_HOME/bin:$PATH # sqoop hadoop环境配置 ADD hadoop-3.3.2.tar.gz /opt/apache/ ENV HADOOP_HOME=/opt/apache/hadoop RUN ln -s /opt/apache/hadoop-3.3.2 $HADOOP_HOME ENV HADOOP_CONF_DIR=${HADOOP_HOME}/etc/hadoop ENV PATH=${HADOOP_HOME}/bin:$PATH # mysql JDBC driver # wget https://repo1.maven.org/maven2/mysql/mysql-connector-java/8.0.30/mysql-connector-java-8.0.30.jar COPY mysql-connector-java-8.0.30.jar $SQOOP_HOME/lib/ # chown RUN chown -R admin:admin /opt/apache #设置的工作目录 WORKDIR $SQOOP_HOME # 执行脚本,构建镜像时不执行,运行实例才会执行 ENTRYPOINT ["/opt/apache/docker-entrypoint.sh"]- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

开始构建镜像

docker build -t myharbor.com/bigdata/sqoop:sqoop-1.99.7-bin-hadoop200 . --no-cache # 上传镜像 docker push myharbor.com/bigdata/sqoop:sqoop-1.99.7-bin-hadoop200 # 删除镜像 docker rmi myharbor.com/bigdata/sqoop:sqoop-1.99.7-bin-hadoop200 crictl rmi myharbor.com/bigdata/sqoop:sqoop-1.99.7-bin-hadoop200- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

3)创建sqoop chart模板

helm create sqoop- 1

4)修改yaml编排

sqoop/values.yaml

image: repository: myharbor.com/bigdata/sqoop pullPolicy: IfNotPresent # Overrides the image tag whose default is the chart appVersion. tag: "sqoop-1.99.7-bin-hadoop200" securityContext: runAsUser: 10000 runAsGroup: 10000 privileged: true service: type: ClusterIP port: 12000- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

sqoop/templates/configmap.yaml

apiVersion: v1 kind: ConfigMap metadata: name: {{ include "sqoop.fullname" . }} labels: {{- include "sqoop.labels" . | nindent 4 }} data: sqoop.properties: |- # Sqoop configuration. # org.apache.sqoop.log4j.debug=false org.apache.sqoop.log4j.rootLogger=INFO, file org.apache.sqoop.log4j.category.org.apache.sqoop=INFO org.apache.sqoop.log4j.appender.file=org.apache.log4j.RollingFileAppender org.apache.sqoop.log4j.appender.file.File=@LOGDIR@/sqoop.log org.apache.sqoop.log4j.appender.file.MaxFileSize=25MB org.apache.sqoop.log4j.appender.file.MaxBackupIndex=5 org.apache.sqoop.log4j.appender.file.layout=org.apache.log4j.PatternLayout org.apache.sqoop.log4j.appender.file.layout.ConversionPattern=%d{ISO8601} %-5p [%l] %m%n # Audit logger for default configuration of FileAuditLogger org.apache.sqoop.log4j.logger.audit=INFO, audit org.apache.sqoop.log4j.appender.audit=org.apache.log4j.RollingFileAppender org.apache.sqoop.log4j.appender.audit.File=@LOGDIR@/audit.log org.apache.sqoop.log4j.appender.audit.MaxFileSize=25MB org.apache.sqoop.log4j.appender.audit.MaxBackupIndex=5 org.apache.sqoop.log4j.appender.audit.layout=org.apache.log4j.PatternLayout org.apache.sqoop.log4j.appender.audit.layout.ConversionPattern=%d{ISO8601} %-5p [%l] %m%n # # Audit Loggers Configuration # Multiple audit loggers could be given here. To specify an # audit logger, you should at least add org.apache.sqoop. # auditlogger.[LoggerName].class. You could also provide # more configuration options by using org.apache.sqoop. # auditlogger.[LoggerName] prefix, then all these options # are parsed to the logger class. # org.apache.sqoop.auditlogger.default.class=org.apache.sqoop.audit.FileAuditLogger org.apache.sqoop.auditlogger.default.logger=audit # # Repository configuration # The Repository subsystem provides the special prefix which # is "org.apache.sqoop.repository.sysprop". Any property that # is specified with this prefix is parsed out and set as a # system property. For example, if the built in Derby repository # is being used, the sysprop prefixed properties can be used # to affect Derby configuration at startup time by setting # the appropriate system properties. # # Repository provider org.apache.sqoop.repository.provider=org.apache.sqoop.repository.JdbcRepositoryProvider # Repository upgrade # If set to true, it will not upgrade the sqoop respository schema, by default it will iniate the upgrade on server start-up org.apache.sqoop.repository.schema.immutable=false # JDBC repository provider configuration org.apache.sqoop.repository.jdbc.handler=org.apache.sqoop.repository.derby.DerbyRepositoryHandler org.apache.sqoop.repository.jdbc.transaction.isolation=READ_COMMITTED org.apache.sqoop.repository.jdbc.maximum.connections=10 org.apache.sqoop.repository.jdbc.url=jdbc:derby:@BASEDIR@/repository/db;create=true org.apache.sqoop.repository.jdbc.driver=org.apache.derby.jdbc.EmbeddedDriver org.apache.sqoop.repository.jdbc.user=sa org.apache.sqoop.repository.jdbc.password= # System properties for embedded Derby configuration org.apache.sqoop.repository.sysprop.derby.stream.error.file=@LOGDIR@/derbyrepo.log # # Sqoop Connector configuration # If set to true will initiate Connectors config upgrade during server startup # org.apache.sqoop.connector.autoupgrade=false # # Sqoop Driver configuration # If set to true will initiate the Driver config upgrade during server startup # org.apache.sqoop.driver.autoupgrade=false # Sleeping period for reloading configuration file (once a minute) org.apache.sqoop.core.configuration.provider.properties.sleep=60000 # # Submission engine configuration # # Submission engine class org.apache.sqoop.submission.engine=org.apache.sqoop.submission.mapreduce.MapreduceSubmissionEngine # Number of milliseconds, submissions created before this limit will be removed, default is one day #org.apache.sqoop.submission.purge.threshold= # Number of milliseconds for purge thread to sleep, by default one day #org.apache.sqoop.submission.purge.sleep= # Number of milliseconds for update thread to sleep, by default 5 minutes #org.apache.sqoop.submission.update.sleep= # Hadoop configuration directory org.apache.sqoop.submission.engine.mapreduce.configuration.directory=/opt/apache/hadoop/etc/hadoop/ # Log level for Sqoop Mapper/Reducer org.apache.sqoop.submission.engine.mapreduce.configuration.loglevel=INFO # # Execution engine configuration # org.apache.sqoop.execution.engine=org.apache.sqoop.execution.mapreduce.MapreduceExecutionEngine # # Authentication configuration # #org.apache.sqoop.security.authentication.type=SIMPLE #org.apache.sqoop.security.authentication.handler=org.apache.sqoop.security.authentication.SimpleAuthenticationHandler #org.apache.sqoop.security.authentication.anonymous=true #org.apache.sqoop.security.authentication.type=KERBEROS #org.apache.sqoop.security.authentication.handler=org.apache.sqoop.security.authentication.KerberosAuthenticationHandler #org.apache.sqoop.security.authentication.kerberos.principal=sqoop/_HOST@NOVALOCAL #org.apache.sqoop.security.authentication.kerberos.keytab=/home/kerberos/sqoop.keytab #org.apache.sqoop.security.authentication.kerberos.http.principal=HTTP/_HOST@NOVALOCAL #org.apache.sqoop.security.authentication.kerberos.http.keytab=/home/kerberos/sqoop.keytab #org.apache.sqoop.security.authentication.enable.doAs=true #org.apache.sqoop.security.authentication.proxyuser.#USER#.users=* #org.apache.sqoop.security.authentication.proxyuser.#USER#.groups=* #org.apache.sqoop.security.authentication.proxyuser.#USER#.hosts=* # Default user, default value is "sqoop.anonymous.user" #org.apache.sqoop.security.authentication.default.user= # # Authorization configuration # #org.apache.sqoop.security.authorization.handler=org.apache.sqoop.security.authorization.DefaultAuthorizationHandler #org.apache.sqoop.security.authorization.access_controller=org.apache.sqoop.security.authorization.DefaultAuthorizationAccessController #org.apache.sqoop.security.authorization.validator=org.apache.sqoop.security.authorization.DefaultAuthorizationValidator #org.apache.sqoop.security.authorization.authentication_provider=org.apache.sqoop.security.authorization.DefaultAuthenticationProvider #org.apache.sqoop.security.authorization.server_name=SqoopServer1 # # SSL/TLS configuration # #org.apache.sqoop.security.tls.enabled=false #org.apache.sqoop.security.tls.protocol="TLSv1.2" #org.apache.sqoop.security.tls.keystore= #org.apache.sqoop.security.tls.keystore_password= # # Repository Encryption # #org.apache.sqoop.security.repo_encryption.enabled=true #org.apache.sqoop.security.repo_encryption.password= #org.apache.sqoop.security.repo_encryption.password_generator= #org.apache.sqoop.security.repo_encryption.hmac_algorithm=HmacSHA256 #org.apache.sqoop.security.repo_encryption.cipher_algorithm=AES #org.apache.sqoop.security.repo_encryption.cipher_key_size=16 #org.apache.sqoop.security.repo_encryption.cipher_spec=AES/CBC/PKCS5Padding #org.apache.sqoop.security.repo_encryption.initialization_vector_size=16 #org.apache.sqoop.security.repo_encryption.pbkdf2_algorithm=PBKDF2WithHmacSHA1 #org.apache.sqoop.security.repo_encryption.pbkdf2_rounds=4000 # External connectors load path # "/path/to/external/connectors/": Add all the connector JARs in the specified folder # org.apache.sqoop.connector.external.loadpath= # Sqoop application classpath # ":" separated list of jars to be included in sqoop. # org.apache.sqoop.classpath.extra= # Sqoop extra classpath to be included with all jobs # ":" separated list of jars to be included in map job classpath. # org.apache.sqoop.classpath.job= # # Jetty Server configuration # #org.apache.sqoop.jetty.thread.pool.worker.max=500 #org.apache.sqoop.jetty.thread.pool.worker.min=5 #org.apache.sqoop.jetty.thread.pool.worker.alive.time=60 org.apache.sqoop.jetty.port={{ .Values.service.port }} # Blacklisted Connectors # ":" separated list of connector names as specified in their # sqoopconnector.properties file org.apache.sqoop.connector.blacklist=- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

- 69

- 70

- 71

- 72

- 73

- 74

- 75

- 76

- 77

- 78

- 79

- 80

- 81

- 82

- 83

- 84

- 85

- 86

- 87

- 88

- 89

- 90

- 91

- 92

- 93

- 94

- 95

- 96

- 97

- 98

- 99

- 100

- 101

- 102

- 103

- 104

- 105

- 106

- 107

- 108

- 109

- 110

- 111

- 112

- 113

- 114

- 115

- 116

- 117

- 118

- 119

- 120

- 121

- 122

- 123

- 124

- 125

- 126

- 127

- 128

- 129

- 130

- 131

- 132

- 133

- 134

- 135

- 136

- 137

- 138

- 139

- 140

- 141

- 142

- 143

- 144

- 145

- 146

- 147

- 148

- 149

- 150

- 151

- 152

- 153

- 154

- 155

- 156

- 157

- 158

- 159

- 160

- 161

- 162

- 163

- 164

- 165

- 166

- 167

- 168

- 169

- 170

- 171

- 172

- 173

- 174

- 175

- 176

- 177

- 178

- 179

- 180

- 181

- 182

- 183

- 184

- 185

- 186

- 187

- 188

- 189

- 190

- 191

- 192

sqoop/templates/statefulset.yaml

apiVersion: apps/v1 kind: StatefulSet metadata: name: {{ include "sqoop.fullname" . }} labels: {{- include "sqoop.labels" . | nindent 4 }} spec: serviceName: {{ include "sqoop.fullname" . }} {{- if not .Values.autoscaling.enabled }} replicas: {{ .Values.replicaCount }} {{- end }} selector: matchLabels: {{- include "sqoop.selectorLabels" . | nindent 6 }} template: metadata: {{- with .Values.podAnnotations }} annotations: {{- toYaml . | nindent 8 }} {{- end }} labels: {{- include "sqoop.selectorLabels" . | nindent 8 }} spec: {{- with .Values.imagePullSecrets }} imagePullSecrets: {{- toYaml . | nindent 8 }} {{- end }} serviceAccountName: {{ include "sqoop.serviceAccountName" . }} securityContext: {{- toYaml .Values.podSecurityContext | nindent 8 }} containers: - name: {{ .Chart.Name }} securityContext: {{- toYaml .Values.securityContext | nindent 12 }} image: "{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}" imagePullPolicy: {{ .Values.image.pullPolicy }} ports: - name: sqoop containerPort: {{ .Values.service.port }} protocol: TCP volumeMounts: - name: {{ .Release.Name }}-conf mountPath: /opt/apache/sqoop/conf/sqoop.properties subPath: sqoop.properties livenessProbe: tcpSocket: port: sqoop readinessProbe: tcpSocket: port: sqoop resources: {{- toYaml .Values.resources | nindent 12 }} {{- with .Values.nodeSelector }} nodeSelector: {{- toYaml . | nindent 8 }} {{- end }} {{- with .Values.affinity }} affinity: {{- toYaml . | nindent 8 }} {{- end }} {{- with .Values.tolerations }} tolerations: {{- toYaml . | nindent 8 }} {{- end }} volumes: - name: {{ .Release.Name }}-conf configMap: name: {{ template "sqoop.fullname" . }}- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

5)开始部署

helm install sqoop ./sqoop -n sqoop --create-namespace kubectl get pods,svc -n sqoop -owide- 1

- 2

6)测试验证

1、数据从MYSQL导入到HDFS(Import)

【1】 创建JDBC连接

$ kubectl exec -it `kubectl get pods -n sqoop|awk 'NR>1{print $1}'|head -1` -n sqoop -- sqoop2-shell sqoop:000> set server --host sqoop-0.sqoop.sqoop --port 12000 --webapp sqoop #sqoop:000> set server --url http://sqoop-0.sqoop.sqoop:12000/sqoop # 先查看connector sqoop:000> show connector # 创建mysql连接 sqoop:000> create link -connector generic-jdbc-connector Creating link for connector with name generic-jdbc-connector Please fill following values to create new link object Name: mysql-jdbc-link Database connection Driver class: com.mysql.cj.jdbc.Driver Connection String: jdbc:mysql://mysql-primary.mysql:3306/sqoop?characterEncoding=utf8&useSSL=false&serverTimezone=UTC&rewriteBatchedStatements=true Username: root Password: ****** #MGSwjTorGj Fetch Size: Connection Properties: There are currently 0 values in the map: entry# SQL Dialect Identifier enclose: New link was successfully created with validation status OK and name mysql-jdbc-link sqoop:000> sqoop:000> show link # 删除 # sqoop:000> delete link --name mysql-jdbc-link- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

【2】创建HDFS连接

sqoop:000> create link -connector hdfs-connector Creating link for connector with name hdfs-connector Please fill following values to create new link object Name: hdfs-link HDFS cluster URI: hdfs://myhdfs #URI: hdfs://hadoop-ha-hadoop-hdfs-nn-0.hadoop-ha-hadoop-hdfs-nn.hadoop-ha.svc.cluster.local:8082 Conf directory: /opt/apache/hadoop/etc/hadoop Additional configs:: There are currently 0 values in the map: entry# New link was successfully created with validation status OK and name hdfs-link sqoop:000> show link- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

【3】创建Job任务

首先先创建HDFS存储目录

hadoop fs -mkdir -p /user/admin/sqoop/output/- 1

再执行数据转换

$ kubectl exec -it `kubectl get pods -n sqoop|awk 'NR>1{print $1}'|head -1` -n sqoop -- sqoop2-shell sqoop:000> set server --url http://sqoop-0.sqoop.sqoop:12000/sqoop sqoop:000> create job -f "mysql-jdbc-link" -t "hdfs-link" Creating job for links with from name mysql-jdbc-link and to name hdfs-link Please fill following values to create new job object Name: mysql2hdfs Database source Schema name: sqoop Table name: test1 SQL statement: Column names: There are currently 0 values in the list: element# Partition column: Partition column nullable: Boundary query: Incremental read Check column: Last value: Target configuration Override null value: Null value: File format: 0 : TEXT_FILE 1 : SEQUENCE_FILE 2 : PARQUET_FILE Choose: 0 Compression codec: 0 : NONE 1 : DEFAULT 2 : DEFLATE 3 : GZIP 4 : BZIP2 5 : LZO 6 : LZ4 7 : SNAPPY 8 : CUSTOM Choose: 0 Custom codec: Output directory: /user/admin/sqoop/output Append mode: 1 Throttling resources Extractors: 1 Loaders: 1 Classpath configuration Extra mapper jars: There are currently 0 values in the list: element# New job was successfully created with validation status OK and name mysql2hdfs sqoop:000> show job +----+------------+------------------------------------------+----------------------------+---------+ | Id | Name | From Connector | To Connector | Enabled | +----+------------+------------------------------------------+----------------------------+---------+ | 2 | mysql2hdfs | mysql-jdbc-link (generic-jdbc-connector) | hdfs-link (hdfs-connector) | true | +----+------------+------------------------------------------+----------------------------+---------+ sqoop:000>- 1

- 2

- 3

- 4

- 5

- 6

- 7

- 8

- 9

- 10

- 11

- 12

- 13

- 14

- 15

- 16

- 17

- 18

- 19

- 20

- 21

- 22

- 23

- 24

- 25

- 26

- 27

- 28

- 29

- 30

- 31

- 32

- 33

- 34

- 35

- 36

- 37

- 38

- 39

- 40

- 41

- 42

- 43

- 44

- 45

- 46

- 47

- 48

- 49

- 50

- 51

- 52

- 53

- 54

- 55

- 56

- 57

- 58

- 59

- 60

- 61

- 62

- 63

- 64

- 65

- 66

- 67

- 68

【4】执行Job

sqoop:000> show job sqoop:000> start job -n mysql2hdfs- 1

- 2

显示持久的作业提交对象show submission show submission --j jobName show submission --job jobName --detail- 1

- 2

- 3

2、数据导出(Haoop->RDBMS)

这个示例演示可以参考我这篇文章:大数据Hadoop之——数据同步工具Sqoop7)卸载

helm uninstall sqoop -n sqoop kubectl delete ns sqoop --force- 1

- 2

git地址:https://gitee.com/hadoop-bigdata/sqoop-on-k8s

Sqoop on k8s 讲解与实战操作就先到这里了,有任何疑问欢迎留言或者私信,后续会持续更新【云原生+大数据】相关的文章,请小伙伴耐心等待~

-

相关阅读:

C++实现KNN和K-Means

HTML CSS JS 网页设计作业「我的家乡吉林」

ELK【elasticsearch+logstash+kibana】企业级日志分析系统

MeterSphere | 在接口自动化场景中,设置全局Token方法

C#开发五子棋游戏:从新手到高手的编程之旅

【Proteus仿真】【STM32单片机】汽车倒车报警系统设计

【实战技能】非技术背景经理的技术管理

Unity/C# 舍入的五种写法

力扣解法汇总946-验证栈序列

美国经济危机历史与展望

- 原文地址:https://blog.csdn.net/qq_35745940/article/details/127829478